AI is changing how we use technology.

At the core of AI are intelligent agents. Simple reflex agents are among the most basic of them: they make fast choices based on what they sense at the moment. These simple agents are the building blocks of many AI systems we use every day.

Quick Summary

Simple reflex agents work on a basic idea. They make choices based only on what they perceive right now, using a fixed set of rules. They don't remember the past and cannot plan for the future. They sense their surroundings with sensors, apply if-then rules, and take action through actuators.

These agents work best in clear, simple settings where quick responses matter most. Let’s find out more in this blog.

What is a Simple Reflex Agent?

Simple reflex agents are basic AI systems built on one simple idea: they make choices based only on what they sense right now. Unlike complex AI that uses learning or neural networks, simple reflex agents use a set of fixed rules.

These rules tell the agent how to respond to specific sensor inputs, and the agent acts accordingly. Simple reflex agents are built for simplicity and speed: they have no memory and use only current sensor data.

This basic design makes them easy to build and understand. They're great for beginners learning about AI. Even though they're simple, these agents can do useful jobs in many areas. They work in robots, automated systems, digital worlds, and games. By using sensor data and following simple rules, these agents can act in ways that seem smart within their limits.

Key Components of a Simple Reflex Agent

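Simple reflex agents have three main parts that work together: sensors, condition-action rules, and actuators. Some extended designs also add a state updater or a utility function.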

1. Sensors

With sensors, agents get information about their surroundings. They work like our eyes and ears. Sensors can be simple, like bump sensors on robots, or complex, like cameras on self-driving cars. They turn real-world information into data the agent can use. Good sensors are important: if sensors give wrong data, the agent will make bad choices even if all its other parts work well.

2. Condition-Action Rules

These rules connect what the agent senses to what it should do. They are "if-then" statements that guide behavior, such as "if an obstacle is ahead, then turn." These direct rules help the agent respond quickly.
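To make the idea concrete, here is a minimal Python sketch of condition-action rules stored as data. The percept fields (`obstacle_ahead`, `light_level`) and the action names are hypothetical, chosen only for illustration, and the sketch assumes the rules are checked in order with the first match winning.

```python
from typing import Callable

Percept = dict[str, object]
# Each rule pairs a condition (a test on the current percept) with an action.
Rule = tuple[Callable[[Percept], bool], str]

# Hypothetical rules for a small robot; order matters because the first match wins.
RULES: list[Rule] = [
    (lambda p: bool(p["obstacle_ahead"]), "turn"),
    (lambda p: float(p["light_level"]) < 0.2, "switch_on_lamp"),
    (lambda p: True, "move_forward"),  # default rule: always matches
]


def choose_action(percept: Percept) -> str:
    """Return the action of the first rule whose condition holds."""
    for condition, action in RULES:
        if condition(percept):
            return action
    raise ValueError("no rule matched")  # unreachable while the default rule exists


print(choose_action({"obstacle_ahead": True, "light_level": 0.1}))   # turn
print(choose_action({"obstacle_ahead": False, "light_level": 0.1}))  # switch_on_lamp
print(choose_action({"obstacle_ahead": False, "light_level": 0.9}))  # move_forward
```

Keeping the rules in a list rather than in hard-coded if statements makes them easy to add, remove, or reorder, and the catch-all default rule guarantees the agent always has an action.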

3. Actuators

Actuators are parts that take action based on the agent's decisions. They change the world around them. In physical devices, actuators might be motors, speakers, or heaters. In software, they control what happens on screen or in a database.

4. State Updater

Some extended simple reflex agents include a state updater. Despite its name, this part doesn't store memories; it only helps process the current sensor data more accurately.
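One way to read the state updater is as a step that cleans up the current readings without keeping any history. The sketch below assumes, hypothetically, three redundant temperature sensors read at the same instant; taking their median means a single faulty sensor cannot trigger the wrong rule. The set point is an illustrative value, and nothing is stored between calls.

```python
from statistics import median


def update_state(readings: list[float]) -> float:
    """Combine simultaneous sensor readings into one estimate.

    Uses only the readings passed in right now; no past values are kept,
    so the agent remains a simple reflex agent.
    """
    if not readings:
        raise ValueError("need at least one reading")
    return median(readings)


def act(readings: list[float], set_point: float = 21.0) -> str:
    """Apply a single heating rule to the cleaned-up current state."""
    temperature = update_state(readings)
    return "heat" if temperature < set_point else "idle"


# One sensor is stuck at 0.0. The median ignores it, while a plain average
# (about 14.8) would wrongly switch the heating on.
print(update_state([22.0, 0.0, 22.4]))  # 22.0
print(act([22.0, 0.0, 22.4]))           # idle
```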

5. Utility Function

Some extended versions use a utility function to choose between actions when more than one rule applies. The function scores each candidate action so the agent can pick the best one.
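When several rules match at once, a utility function can break the tie. In this sketch the rules, action names, and utility scores are all hypothetical: every rule whose condition holds proposes an action, and the agent picks the proposal with the highest score. This borrows an idea from utility-based agents; a pure simple reflex agent would instead rely on a fixed rule order.

```python
from typing import Callable

Percept = dict[str, bool]

# Hypothetical rules: (condition, action). Several may match the same percept.
RULES: list[tuple[Callable[[Percept], bool], str]] = [
    (lambda p: p["dirt_detected"], "suck"),
    (lambda p: p["battery_low"], "return_to_dock"),
    (lambda p: True, "move_forward"),
]

# Hypothetical utility of each action; higher is better.
UTILITY: dict[str, float] = {
    "return_to_dock": 10.0,
    "suck": 5.0,
    "move_forward": 1.0,
}


def choose_action(percept: Percept) -> str:
    """Collect every matching action and return the one with the highest utility."""
    candidates = [action for condition, action in RULES if condition(percept)]
    return max(candidates, key=lambda action: UTILITY[action])


print(choose_action({"dirt_detected": True, "battery_low": False}))  # suck
print(choose_action({"dirt_detected": True, "battery_low": True}))   # return_to_dock
```

Here the score depends only on the action; a richer utility function could also take the current percept into account.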

6. Sensors and actuators

Sensors detect changes in the environment, while actuators respond by performing actions. Together, they help machines sense and interact with the real world effectively.

How a Simple Reflex Agent Works

Simple reflex agents follow a direct process. Here's basic pseudocode that shows how they work:

    function SimpleReflexAgent(percept):
        state = InterpretePercept(percept)
        action = Rules(state)
        return action

    function InterpretePercept(percept):
        Extract relevant information from the percept
        Return the current state based on the percept

    function Rules(state):
        Apply rules based on the current state
        Return the action to be taken based on the rules

Each of the three functions plays a specific role:

- Rules

This function applies the if-then rules to decide what to do. It takes the current state and matches it to the right action.

The rules can be simple or more complex, but they all connect specific situations to specific actions.

The SimpleReflexAgent only reacts to what it senses right now. It doesn't think about the past or plan for the future. This makes it perfect for situations where quick, simple responses are all that's needed.

- InterpretePercept

This function turns raw sensor data into useful information. It figures out what's happening in the environment.

For example, in a robot vacuum, sensors might detect light or resistance. InterpretePercept turns these signals into clear states like ‘found dirt’ or ‘hit wall.’

- SimpleReflexAgent

This main function controls the whole process. It:

- Gets sensor data

- Sends this data to be interpreted

- Uses rules to decide what to do

- Returns the action to take

It acts as the brain of the operation. It connects all parts together.
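Putting the three functions together, here is a minimal runnable Python version of the pseudocode, using the robot-vacuum example from InterpretePercept. The percept fields (`dirt_signal`, `bumper_force`), the thresholds, and the action names are hypothetical assumptions; a real robot would use its own sensor readings and calibration. Function names follow Python's snake_case convention.

```python
# Hypothetical thresholds; real values depend on the robot's sensors.
DIRT_THRESHOLD = 0.6  # dirt-sensor signal above this is read as "found dirt"
BUMP_THRESHOLD = 2.0  # bumper force above this is read as "hit wall"


def interpret_percept(percept: dict[str, float]) -> str:
    """Turn raw sensor signals into a simple state label."""
    if percept["bumper_force"] > BUMP_THRESHOLD:
        return "hit wall"
    if percept["dirt_signal"] > DIRT_THRESHOLD:
        return "found dirt"
    return "clear"


# Condition-action rules: each state maps directly to one action.
RULES = {
    "hit wall": "turn",
    "found dirt": "suck",
    "clear": "move forward",
}


def rules(state: str) -> str:
    """Look up the action for the current state."""
    return RULES[state]


def simple_reflex_agent(percept: dict[str, float]) -> str:
    """Sense, interpret, apply rules, return the action. No memory is kept."""
    state = interpret_percept(percept)
    return rules(state)


for percept in [
    {"dirt_signal": 0.1, "bumper_force": 0.0},
    {"dirt_signal": 0.8, "bumper_force": 0.0},
    {"dirt_signal": 0.8, "bumper_force": 3.5},
]:
    print(simple_reflex_agent(percept))
# move forward
# suck
# turn
```

The wall check runs first, so a bump wins even when dirt is also detected. Because nothing is stored between calls, the same percept always produces the same action.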

Applications of Simple Reflex Agents in AI

Simple reflex agents are used in applications like robotics, gaming, and automation systems, where quick, rule-based responses to current inputs are needed. Common examples include:

1. Thermostats

HVAC thermostats work as simple reflex agents. They constantly measure the room temperature with a sensor and compare it to a desired set point. Using simple if-then rules, they switch heating or cooling on to keep rooms comfortable.

Newer smart thermostats add schedules, but at heart they still follow the simple reflex pattern: if too cold, heat; if too hot, cool. This plain approach has kept homes comfortable for many years.
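The thermostat rule can be written as a pure function of the current temperature reading. This sketch assumes a hypothetical set point and a small dead band around it, so the system idles near the set point instead of flipping straight between heating and cooling. The dead band still depends only on the current reading, so no memory is involved.

```python
def thermostat(temperature: float, set_point: float = 21.0, band: float = 0.5) -> str:
    """Simple reflex thermostat: decide from the current reading alone.

    set_point and band (degrees Celsius) are illustrative defaults.
    """
    if temperature < set_point - band:
        return "heat"  # too cold
    if temperature > set_point + band:
        return "cool"  # too hot
    return "off"       # close enough to the set point


for reading in (18.0, 21.2, 24.0):
    print(reading, thermostat(reading))
# 18.0 heat
# 21.2 off
# 24.0 cool
```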

2. Vacuum Cleaning Robots

Basic robot vacuums move through simple spaces using obstacle sensors and dirt sensors, reacting whenever they detect something. They use if-then rules to get around: when they hit something, they turn; when they find dirt, they increase suction or turn on their brushes.

Early models like the first Roomba relied mostly on reflex actions, using bump sensors and dirt detectors to move around without building any maps. This shows how simple rules can produce behavior that looks intelligent: a robot running only on reflex rules can seem to "hunt" for dirt or clean a room step by step.

3. Traffic Light Control

In basic traffic control systems, traffic lights act as reflex agents. They follow rules based on timers or sensor inputs: when sensors detect cars waiting at a red light and no cross traffic, the controller applies a rule to change the signal.

While newer traffic control systems have become smarter, many intersections still use simple reflex controllers. People trust them because they are reliable and behave the same way every time. They show how reflex agents can run critical road infrastructure where safety and predictability matter most.
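A sensor-actuated signal can be sketched the same way. The percept fields here are hypothetical: whether cars are waiting at the red light, whether cross traffic is present, and how long the current phase has lasted (a timer reading treated as just another sensor input). The minimum phase length is an assumed value.

```python
from dataclasses import dataclass

MIN_PHASE_SECONDS = 10.0  # hypothetical minimum time before the lights may change


@dataclass
class Percept:
    cars_waiting_at_red: bool  # detector on the approach that currently has red
    cross_traffic: bool        # detector on the approach that currently has green
    seconds_in_phase: float    # timer reading for the current phase


def signal_controller(p: Percept) -> str:
    """Return 'change' to switch the lights, or 'hold' to keep them as they are."""
    if (p.cars_waiting_at_red
            and not p.cross_traffic
            and p.seconds_in_phase >= MIN_PHASE_SECONDS):
        return "change"
    return "hold"


print(signal_controller(Percept(True, False, 15.0)))  # change
print(signal_controller(Percept(True, True, 15.0)))   # hold
print(signal_controller(Percept(True, False, 3.0)))   # hold
```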

4. Automatic Doors

Automatic doors are one of the simplest reflex agents in everyday use. A motion or presence sensor detects whether someone is near the door, and the controller applies a single rule: if someone is detected, open the door; otherwise, close it. The door does not need to remember who passed through or predict who is coming next.

5. Elevator Control

In small or low-traffic buildings, elevator controllers often work as simple reflex agents. They respond to button presses and sensor inputs with fixed rules: if a floor button is pressed, go to that floor; if the door sensors detect an obstruction, reopen the doors.

While modern high-rise elevators use sophisticated scheduling, many basic elevators still use reflex-based control because it works well and is easy to set up. This shows how even simple agents can handle fairly complex decisions through well-designed rule sets.
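The elevator rules can be sketched with an explicit priority: the safety rule is checked first, so an obstructed door always wins over a floor request. The inputs and action strings are hypothetical, and a real controller would handle many more cases, such as multiple requests, travel direction, and door timers.

```python
from typing import Optional


def elevator_controller(current_floor: int,
                        requested_floor: Optional[int],
                        door_obstructed: bool) -> str:
    """Pick one action from the current inputs only (no request queue is kept)."""
    # Rule 1 (safety first): something is in the doorway, so reopen the doors.
    if door_obstructed:
        return "open doors"
    # Rule 2: a floor button is pressed, so move toward that floor.
    if requested_floor is not None and requested_floor != current_floor:
        return "go up" if requested_floor > current_floor else "go down"
    # Rule 3: nothing to do.
    return "wait"


print(elevator_controller(1, 4, door_obstructed=True))      # open doors
print(elevator_controller(1, 4, door_obstructed=False))     # go up
print(elevator_controller(5, 2, door_obstructed=False))     # go down
print(elevator_controller(3, None, door_obstructed=False))  # wait
```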

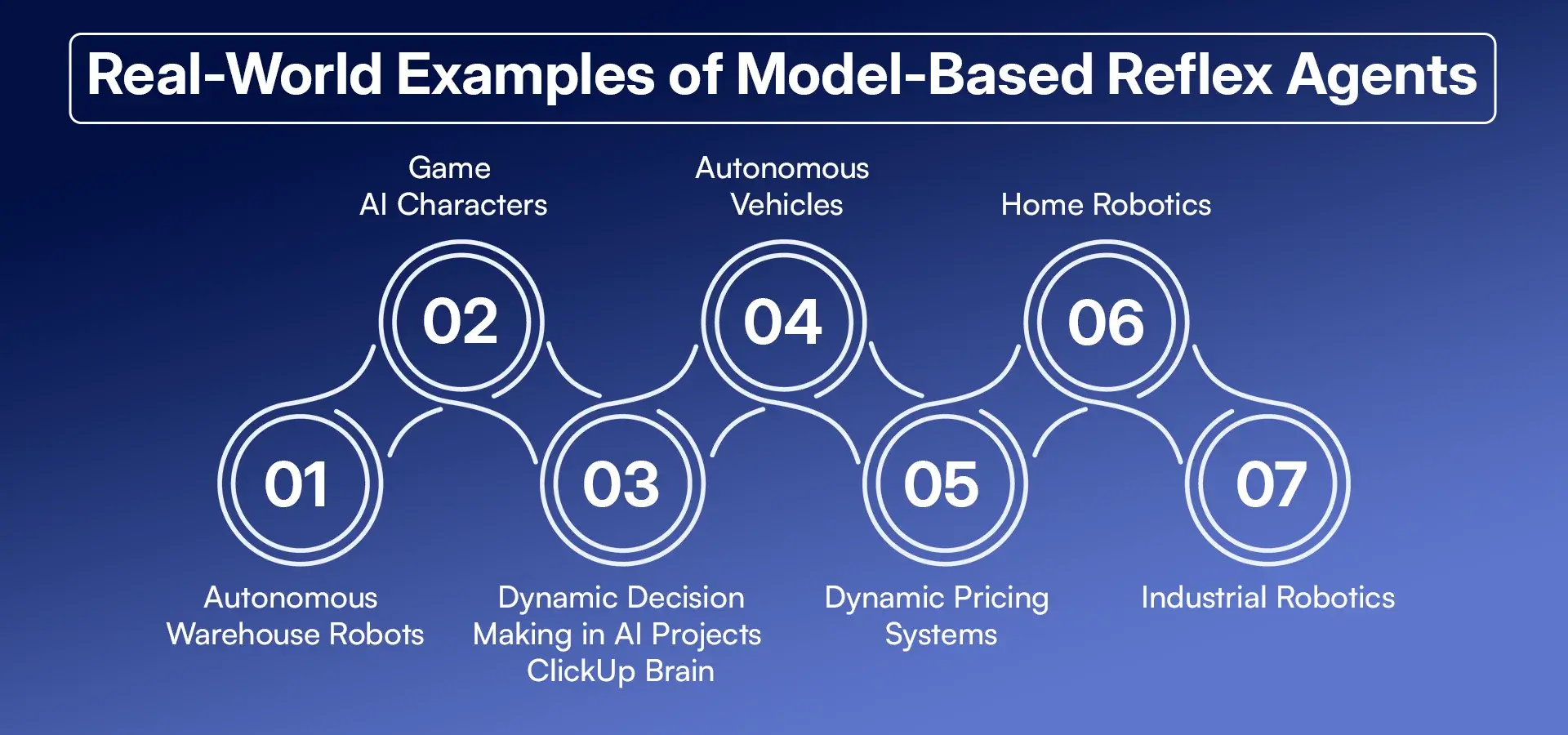

Real-World Examples of Model-Based Reflex Agents

As we move beyond simple reflex agents, we enter the domain of model-based reflex agents: an evolutionary step up that adds an internal model of the environment while keeping reactive decision-making. Here are some real-world applications:
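To see what the internal model adds, here is a minimal sketch of a model-based reflex agent in the classic two-square vacuum world (squares `A` and `B`), a common textbook example rather than any of the products below. The agent remembers what it has learned about each square, so, unlike a simple reflex agent, it can tell when both squares are clean and stop. Class, method, and action names are illustrative.

```python
class ModelBasedVacuum:
    """Model-based reflex agent for a two-square world (A and B)."""

    def __init__(self) -> None:
        # Internal model: what the agent believes about each square.
        self.model = {"A": "unknown", "B": "unknown"}

    def update_state(self, location: str, status: str) -> None:
        # Record the latest observation of the current square.
        self.model[location] = status

    def act(self, location: str, status: str) -> str:
        self.update_state(location, status)
        if status == "dirty":
            # Model the effect of our own action: sucking cleans the square.
            self.model[location] = "clean"
            return "suck"
        if all(s == "clean" for s in self.model.values()):
            return "no-op"  # nothing left to do; a simple reflex agent can't know this
        return "right" if location == "A" else "left"


agent = ModelBasedVacuum()
print(agent.act("A", "dirty"))  # suck
print(agent.act("A", "clean"))  # right
print(agent.act("B", "clean"))  # no-op
```

A simple reflex agent seeing ("B", "clean") could only move left, and at a clean A it would move right again, shuttling back and forth forever because it cannot remember that both squares are already clean.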

1. Autonomous Warehouse Robots

Robots that move through warehouses or carry packages keep internal maps of their surroundings. They update these maps when new objects block the way, which helps them find good paths and avoid collisions.

For example, Amazon's robots, Sequoia and Digit, use model-based techniques to move around warehouse floors. They avoid bumping into workers or other robots and pick and move items efficiently, relying on a map that is updated continuously.

2. Game AI Characters

In video games, non-player characters (NPCs) often use model-based reflex techniques to react intelligently to what real players do. For example, Ubisoft uses this kind of technology in games like Assassin's Creed: enemies keep an internal model of the situation and try to anticipate what players will do. They might retreat or call for backup if they think they are going to lose.

This makes games more engaging. A well-designed NPC can seem to have long-term plans and to adapt its behavior, even though it is just following if-then rules on top of a simple internal model.
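The NPC behavior described above can be sketched as a model-based reflex agent: it remembers the player's last known position, so it can keep searching after losing sight of them, yet each decision is still a plain if-then rule. All field names, thresholds, and action names are hypothetical and are not taken from any particular game.

```python
from dataclasses import dataclass
from typing import Optional

Position = tuple[int, int]


@dataclass
class GuardNPC:
    health: float = 1.0                          # fraction of full health
    last_seen_player: Optional[Position] = None  # internal model of the world

    def act(self, player_visible_at: Optional[Position], allies_nearby: int) -> str:
        # Update the internal model from the current percept.
        if player_visible_at is not None:
            self.last_seen_player = player_visible_at
        # If-then rules applied to the percept plus the model.
        if player_visible_at is not None and self.health < 0.3:
            return "call for backup" if allies_nearby > 0 else "flee"
        if player_visible_at is not None:
            return "attack"
        if self.last_seen_player is not None:
            return f"search near {self.last_seen_player}"
        return "patrol"


guard = GuardNPC()
print(guard.act(None, allies_nearby=0))    # patrol
print(guard.act((4, 7), allies_nearby=0))  # attack
print(guard.act(None, allies_nearby=0))    # search near (4, 7)
guard.health = 0.2
print(guard.act((5, 7), allies_nearby=2))  # call for backup
```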

3. Dynamic Decision-Making in AI Projects - ClickUp Brain

ClickUp Brain applies model-based techniques in dynamic, collaborative workspaces. It maintains internal models of tasks, team structure, and project data, which lets it answer questions quickly, automate tasks, and streamline workflows.

One of its strongest features is context-aware decision-making. ClickUp Brain analyzes current projects, checks which team members are available, looks at past trends, and identifies bottlenecks, then suggests fixes. For example, if a key team member is overloaded, it can recommend reassigning tasks or adjusting timelines to keep the project on track.

ClickUp Brain's AI Knowledge tool lets users tap into company knowledge, giving fast, accurate answers to questions about what's going on. Its AI summarization tool can turn complex information into actionable steps, and its predictive tools use historical data to flag upcoming risks and suggest fixes before things go wrong.

4. Autonomous Vehicles

Self-driving cars are one of the clearest applications of model-based techniques. They maintain detailed models of their surroundings, including road maps, traffic flow, weather conditions, and the likely behavior of other vehicles.

Tesla's self-driving system is a prominent example. It builds a live model of the road, tracking the positions and speeds of nearby vehicles as well as weather conditions, and makes split-second decisions. This combination of fast reactions and anticipation suits self-driving cars well: they must respond instantly to sudden hazards while also understanding the wider traffic picture.

5. Dynamic Pricing Systems

Large online retailers like Amazon use model-based techniques in their dynamic pricing systems. These systems analyze past buying trends, competitors' prices, and real-time demand, and adjust product prices on the fly.

Much like a model-based agent, these systems maintain an internal model of the market, anticipate what will happen, and optimize their pricing strategies. This keeps them competitive and maximizes revenue.

6. Home Robotics

Newer robot vacuums, such as high-end Roomba models, have moved beyond simple reflexes to model-based approaches. They build and maintain maps of your home, remembering room layouts and where tables and chairs are, which helps them clean more efficiently.

Unlike older models that bounced around rooms with no plan, these robots update their maps with each cleaning run. They learn the most efficient paths and find spots that need extra attention.

7. Industrial Robotics

Boston Dynamics' robot dog, Spot, works in harsh industrial and outdoor environments. The agile robot uses advanced model-based technology to move over difficult terrain: its internal model lets it understand uneven ground and adapt to new obstacles. It can handle tasks ranging from industrial inspections to disaster response, working carefully and reliably.

Conclusion

Simple reflex agents are a key building block of AI. They make choices based on the cues they perceive right now. Even though they are simple, they work well in robots, automated systems, and games. Their parts (sensors, rules, and actuators) let them act effectively while reacting only to what is happening at the moment.

As technology advances, we see a shift from simple reflex agents to model-based reflex agents, which keep internal models of their environment to make smarter decisions. Both types are used in many real-world applications and get tasks done with little fuss.

Whether guiding a self-driving car or optimizing a large supply chain, these agents show the power of combining quick reactions with smarter planning. They also show that sometimes the simplest solutions work best. Understanding the basics of AI helps us understand the more complex systems built on top of them.

If you're looking to build AI agents that blend reflex speed with smart planning, RejoiceHub offers advanced AI agent development services to help you turn ideas into working solutions.

Frequently Asked Questions

What is the main difference between a simple reflex agent and a model-based reflex agent?

Simple reflex agents make choices based only on what they perceive right now; they keep no internal model or memory. Model-based reflex agents maintain an internal model of the world that tracks how things change and how the agent's own actions affect them. This model helps them handle environments they cannot fully observe and make smarter decisions.

Can simple reflex agents learn over time?

In their pure form, simple reflex agents do not learn or change over time. They have no memory and work only from fixed if-then rules. Real learning requires more sophisticated agent types with learning components.

Are simple reflex agents still relevant in today's advanced AI landscape?

Yes! Even with the growth of deep learning and more complex AI, simple reflex agents still matter. They work well where quick, predictable responses are key, and they're often used in safety systems, basic control systems, and as building blocks in more complex systems.

What environments are best suited for simple reflex agents?

Simple reflex agents work best in fully observable environments, where the current percept provides all the information needed to make a decision. They're well suited to static or slowly changing environments with clear if-then relationships, such as thermostats, traffic lights, and simple automated tasks.

How can I implement a simple reflex agent for a basic application?

Building a simple reflex agent takes three main steps. First, list the percepts your agent might sense. Second, list the actions your agent can take. Finally, write a set of if-then rules that map each percept to an action. In most programming languages, this can be done with simple if-then statements.
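Here is what those three steps might look like for a hypothetical line-following robot with two downward-facing sensors, one on each side, assumed to be close enough together that a centered line is seen by both. Step 1 lists every percept, step 2 lists the actions, and step 3 is a lookup table of if-then rules. The sensor layout and action names are assumptions for illustration.

```python
from enum import Enum
from itertools import product

# Step 1: every possible percept is a pair (left_on_line, right_on_line).
PERCEPTS = list(product([False, True], repeat=2))


# Step 2: the actions the robot can take.
class Action(Enum):
    FORWARD = "forward"
    TURN_LEFT = "turn left"
    TURN_RIGHT = "turn right"
    STOP = "stop"


# Step 3: if-then rules mapping each percept to an action.
RULES: dict[tuple[bool, bool], Action] = {
    (True, True): Action.FORWARD,      # line under both sensors: go straight
    (True, False): Action.TURN_LEFT,   # line drifting left: steer left
    (False, True): Action.TURN_RIGHT,  # line drifting right: steer right
    (False, False): Action.STOP,       # line lost: stop
}

# Sanity check: every percept has a rule, and no rule covers an unknown percept.
assert set(RULES) == set(PERCEPTS)


def line_follower(left_on_line: bool, right_on_line: bool) -> Action:
    """Return the action for the current pair of sensor readings."""
    return RULES[(left_on_line, right_on_line)]


print(line_follower(True, False).value)  # turn left
```

Enumerating the percepts explicitly makes it easy to check that the rule table is complete, which is the main thing that can go wrong in a small reflex agent.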