If you have ever trained a machine learning model, you have almost certainly heard the term “Epoch”. When I first experimented with neural networks, I wrote the same instruction again and again: “Train the model for 50 epochs.”

When I was studying machine learning, I often wondered what exactly an epoch is. Over time, as I built multiple models and tested them with different configurations, I realized that the number of epochs is one of the most critical hyperparameters in machine learning. In simple terms, for now you can think of it like this: one epoch is one complete pass of the training algorithm through the entire dataset.

But there is a lot more to know about epochs, so if you are a computer engineering student, I would encourage you to read this article to the end. We will look in detail at how epochs work and the mechanisms behind them, and I promise you will come away with in-depth knowledge from this one article.

Quick Summary

While studying or working in machine learning, we come across many technical terms, and one that often confuses beginners is "Epoch".

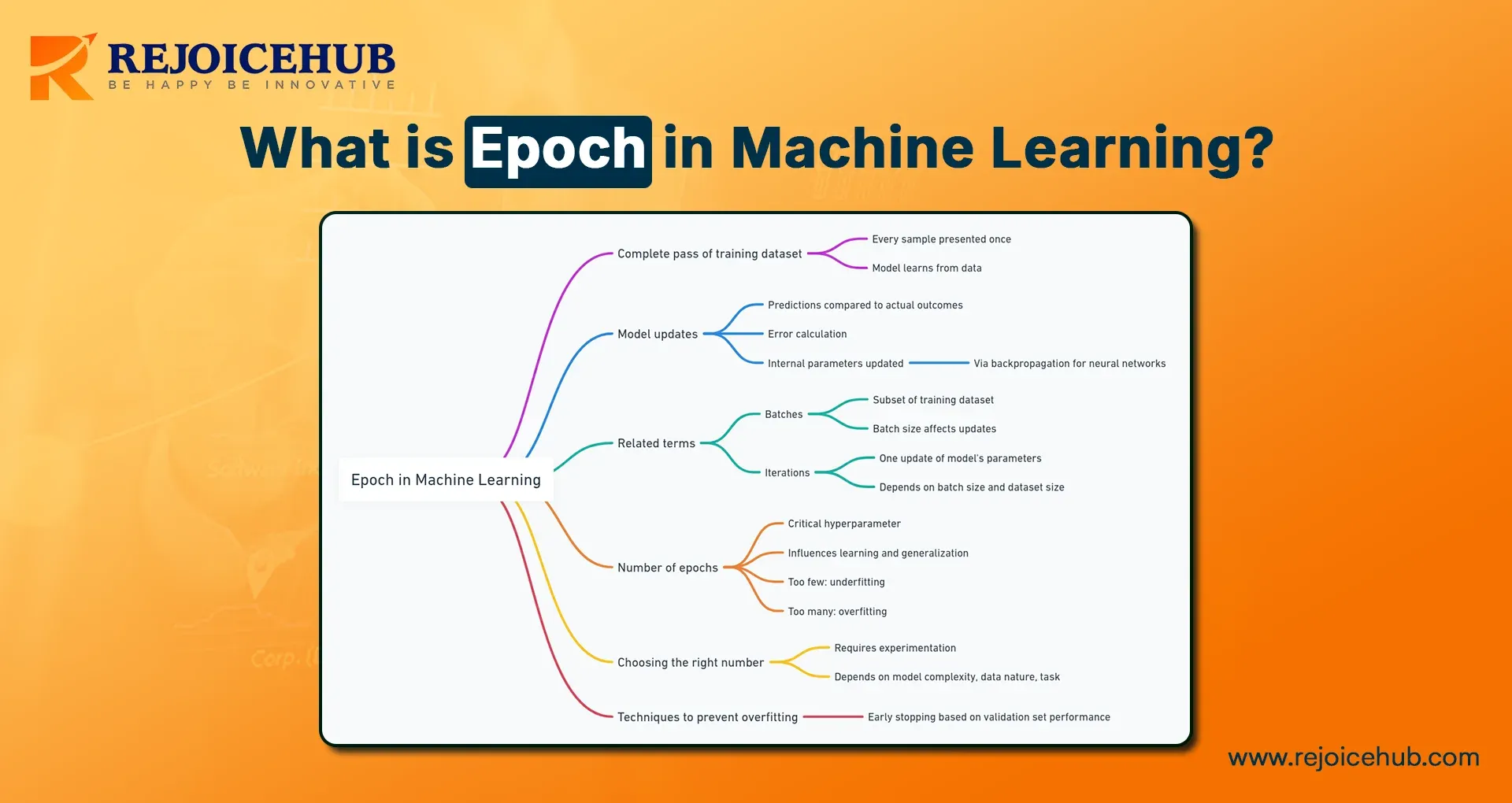

What does it mean? An epoch in machine learning is one complete pass of the entire training dataset through the algorithm. Because datasets are usually very large, they are divided into batches. Passing one batch through the model and updating it is called an iteration, and the iterations that together cover the whole dataset make up one epoch.

Our Focus in this article:

1. What is an Epoch?

2. What is the mechanism of Epoch, and how does it work?

3. What are the examples of Epoch, and what are its advantages and disadvantages?

4. We will also cover related machine learning terms such as batches and iterations.

What Is Epoch?

In machine learning, an epoch simply means using the entire training dataset once. Imagine you have 10,000 images of cats and dogs. Once the model has seen all 10,000 images a single time, you have completed one epoch.

The role of an epoch is to give the model a chance to learn from the training data. During an epoch, the algorithm calculates its errors and updates its internal parameters, such as weights and biases, to reduce them. As more epochs are completed, these adjustments gradually refine the model, which helps it generalize better to unseen data.
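To make this concrete, here is a minimal sketch, assuming a toy linear-regression problem (y = 2x + 1 plus a little noise) and plain full-batch gradient descent in NumPy instead of a neural network. Each epoch computes the errors over the whole dataset and adjusts the weight and bias once; the function name `train_full_batch`, the learning rate, and the data are illustrative assumptions.

```python
import numpy as np

def train_full_batch(x, y, epochs, lr=0.1):
    """Fit y ~ w*x + b with full-batch gradient descent: one update per epoch."""
    w, b = 0.0, 0.0
    losses = []
    for epoch in range(epochs):
        pred = w * x + b
        error = pred - y                             # how far off each prediction is
        losses.append(float(np.mean(error ** 2)))    # mean squared error before this epoch's update
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 * np.mean(error * x)
        grad_b = 2 * np.mean(error)
        # Adjust the parameters to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses

rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, size=200)
y = 2 * x + 1 + rng.normal(0, 0.05, size=200)

w, b, losses = train_full_batch(x, y, epochs=100)
print(f"w={w:.2f}, b={b:.2f}")
print(f"loss at epoch 1: {losses[0]:.3f}, at epoch 100: {losses[-1]:.4f}")
```

The recorded loss drops quickly over the first epochs and then levels off, which is the gradual refinement described above.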

From my practice, I have noticed that models often require hundreds of epochs to converge, especially when you deal with complex data such as images, speech, or natural language.

- Epoch = One full pass over the dataset.

- Iteration = One batch update step.

- Batch = A subset of the dataset used in one iteration.

The number of epochs is a crucial hyperparameter: too few epochs can cause underfitting, while too many can lead to overfitting and waste computing resources.

Example of an Epoch

Let's try to understand this with a simple example.

First, suppose you are training a model on a dataset of 1000 handwritten digit images. Once every one of these 1000 images has passed through the model, that counts as 1 epoch.

- After 1 epoch, the model has seen each of the 1000 samples once.

- After 10 epochs, the model has seen those 1000 image samples 10 times.

Think of it like studying a book: reading it once gives you basic knowledge, but revising it multiple times gives you expertise in the subject.

What Is Iteration?

I personally like this concept a lot, so let's look at iterations. Large datasets usually do not fit in memory at once. So instead of processing the entire dataset in one go, we break it into smaller parts called batches.

Each time the model processes one batch and updates its weights, that is called an iteration.

For example:

- Dataset size = 5000 samples

- Batch size = 500

- Iterations per epoch = 5000 ÷ 500 = 10 iterations

- So, in this case, one epoch = 10 iterations.
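The same arithmetic fits in a small helper. This is a sketch that assumes the last, smaller batch is still processed when the dataset size is not an exact multiple of the batch size (hence rounding up). Some frameworks, for example PyTorch's `DataLoader` with `drop_last=True`, can discard that partial batch instead; the `drop_last` flag here is a parameter of this hypothetical helper that mirrors that choice.

```python
import math

def iterations_per_epoch(dataset_size: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of batch updates needed to cover the dataset once."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    if drop_last:
        # Discard the final incomplete batch, if there is one
        return dataset_size // batch_size
    # Keep the final incomplete batch, so round up
    return math.ceil(dataset_size / batch_size)

print(iterations_per_epoch(5000, 500))   # 10, matching the example above
print(iterations_per_epoch(5000, 64))    # 79: 78 full batches plus one partial batch
```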

In real-world experiments, I have found that smaller batches, say 32 or 64, often give better generalization because they update the weights more frequently. The trade-off is that each epoch then contains more iterations, so a single epoch can take longer to run.

What Is a Batch in Machine Learning?

A batch is a subset of your training dataset. Instead of feeding the entire dataset to the model at once, we split it into smaller groups.

Why does this matter?

1. Efficiency: Smaller batches require less memory, which makes training feasible on limited hardware and more efficient overall.

2. Performance: Smaller batches allow more frequent weight updates, which often helps models converge faster.

3. Flexibility: Batch size can be tuned as a hyperparameter to balance training speed against accuracy.

There are three common approaches:

- Batch Gradient Descent: Uses the whole dataset for every update, so the batch size equals the dataset size.

- Stochastic Gradient Descent (SGD): Uses a batch size of 1, so the model updates after every single sample.

- Mini-Batch Gradient Descent: Uses a batch size between 1 and the dataset size; this is the most common choice in practice.

In my personal testing, mini-batch sizes of 32, 64, or 128 have worked best for deep learning.
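The three approaches differ only in how many samples feed each update. The sketch below assumes a noise-free toy linear-regression problem and a hypothetical `train` function written for illustration; it runs all three variants for the same 5 epochs and counts the updates each one makes.

```python
import numpy as np

def train(x, y, batch_size, epochs, lr=0.05, seed=0):
    """Linear regression y ~ w*x + b trained with (mini-)batch gradient descent.

    batch_size == len(x) -> batch gradient descent (1 update per epoch)
    batch_size == 1      -> stochastic gradient descent (len(x) updates per epoch)
    anything in between  -> mini-batch gradient descent
    """
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    updates = 0
    n = len(x)
    for epoch in range(epochs):
        order = rng.permutation(n)                  # reshuffle the samples every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]   # indices of this batch
            error = (w * x[idx] + b) - y[idx]
            w -= lr * 2 * np.mean(error * x[idx])
            b -= lr * 2 * np.mean(error)
            updates += 1                            # one iteration = one batch update
    return w, b, updates

rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, size=256)
y = 3 * x - 0.5                                     # true parameters: w = 3, b = -0.5

for bs, name in [(256, "batch GD"), (1, "SGD"), (32, "mini-batch GD")]:
    w, b, updates = train(x, y, batch_size=bs, epochs=5)
    print(f"{name:14s} batch_size={bs:3d} updates={updates:5d} w={w:.2f} b={b:.2f}")
```

With 256 samples and 5 epochs, batch gradient descent makes 5 updates, SGD makes 1,280, and mini-batches of 32 make 40. In this toy setup, batch gradient descent is still far from the true weights after so few updates, which is why the number of updates per epoch matters as much as the number of epochs.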

How Do Epochs, Batches, and Iterations Work Together?

Let's put this in simple language, since the formal definitions can feel abstract.

- Epoch: One complete pass over the dataset.

- Batch: A small group of samples processed together.

- Iteration: One update step, performed after processing one batch.

Example:

Dataset size = 10,000 samples

Batch size = 500

Iterations per epoch = 10,000 ÷ 500 = 20 iterations

If you train for 5 epochs, that’s 100 iterations in total.
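The relationship shows up directly in the skeleton of most training loops: an outer loop over epochs and an inner loop over batches. This sketch only counts steps; `train_step` and `run_training` are hypothetical placeholders, and the real forward pass, loss, and weight update would go inside `train_step`.

```python
def train_step(batch):
    """Hypothetical placeholder for forward pass, loss, backward pass, and weight update."""
    pass

def run_training(dataset, batch_size, epochs):
    total_iterations = 0
    for epoch in range(epochs):                              # one epoch = one full pass
        for start in range(0, len(dataset), batch_size):     # one iteration per batch
            batch = dataset[start:start + batch_size]
            train_step(batch)
            total_iterations += 1
    return total_iterations

dataset = list(range(10_000))   # stand-in for 10,000 samples
print(run_training(dataset, batch_size=500, epochs=5))  # 100
```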

Here’s how they complement each other:

- Batch size determines how much data is processed per update, which is a very important factor in training.

- Iterations update the model frequently, so its errors are corrected step by step.

- Epochs ensure that the model doesn't miss any data points.

Mathematical Formula for Epoch, Batch & Iteration

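You can express the relationship between epochs, batches, and iterations like this (the ceiling brackets round up, so a final partial batch still counts as an iteration):

$$\text{Iterations per Epoch} = \left\lceil \frac{\text{Dataset Size}}{\text{Batch Size}} \right\rceil$$

$$\text{Total Iterations} = \text{Epochs} \times \text{Iterations per Epoch}$$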


For example:

- Dataset size = 50,000 samples

- Batch size = 100

- Epochs = 20

$$\text{Iterations per Epoch} = \frac{50{,}000}{100} = 500$$

$$\text{Total Iterations} = 20 \times 500 = 10{,}000$$

This calculation helps you plan how long your training will take.
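As a rough planning aid, the formulas can be combined with a measured time per iteration. This is a sketch: `training_plan` is a hypothetical helper, and the 0.05 seconds per iteration is an assumed figure you would replace with a measurement from your own hardware.

```python
import math

def training_plan(dataset_size, batch_size, epochs, seconds_per_iteration):
    """Return (iterations per epoch, total iterations, estimated hours)."""
    iters_per_epoch = math.ceil(dataset_size / batch_size)
    total_iters = epochs * iters_per_epoch
    hours = total_iters * seconds_per_iteration / 3600
    return iters_per_epoch, total_iters, hours

per_epoch, total, hours = training_plan(50_000, 100, 20, seconds_per_iteration=0.05)
print(per_epoch, total, round(hours, 2))  # 500 10000 0.14
```

For the example above, 10,000 iterations at an assumed 0.05 s each come to about 500 seconds of training.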

Difference Between Epoch and Batch in Machine Learning

Let's break down the differences between an epoch and a batch in machine learning.

| Feature | Epoch | Batch |
|---|---|---|
| Definition | One full pass over the dataset | A subset of the dataset |
| Training impact | Drives convergence and accuracy | Affects speed and memory usage |
| Size | The entire dataset | User-defined, from 1 to the dataset size |
| Frequency | Repeated multiple times during training | Processed multiple times within each epoch |

How to Decide the Right Number of Epochs?

This is a very important question, because the right number of epochs depends on many factors and on the purpose of training. Let's go through it in simple steps.

1. Start from a baseline, for example 50 or 100 epochs.

2. Monitor validation loss: When the validation loss stops decreasing, it is time to stop training.

3. Use early stopping: Most modern frameworks can halt training automatically when the monitored metric stops improving.

4. Experiment with learning rate decay: Gradually lowering the learning rate helps refine training in later epochs.
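Step 3 can be sketched in a few lines of plain Python. The function `early_stopping_epoch`, the `patience` value, and the validation-loss numbers below are hypothetical, chosen only to illustrate the logic; in Keras, the built-in `tf.keras.callbacks.EarlyStopping` callback with `monitor="val_loss"` and a `patience` argument plays this role.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-based, for per-epoch validation losses.

    Training stops once the loss has failed to improve by more than min_delta
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the counter
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Never triggered: training ran for every epoch
    return len(val_losses), best_epoch

# Hypothetical validation-loss curve: improves, then starts rising (overfitting)
val_losses = [0.90, 0.70, 0.55, 0.48, 0.45, 0.46, 0.47, 0.49, 0.52, 0.56]
print(early_stopping_epoch(val_losses, patience=3))  # (8, 5)
```

Here the best validation loss occurs at epoch 5, and training stops at epoch 8 after three epochs without improvement. In practice you would also keep the weights from the best epoch; Keras offers `restore_best_weights=True` for this.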

Remember: There is no universally perfect number; it depends on your dataset size, model complexity, and problem type. If you do not have deep knowledge of machine learning, I would suggest having your model trained by a firm like RejoiceHub to avoid errors.

Advantages of Using Multiple Epochs in Model Training

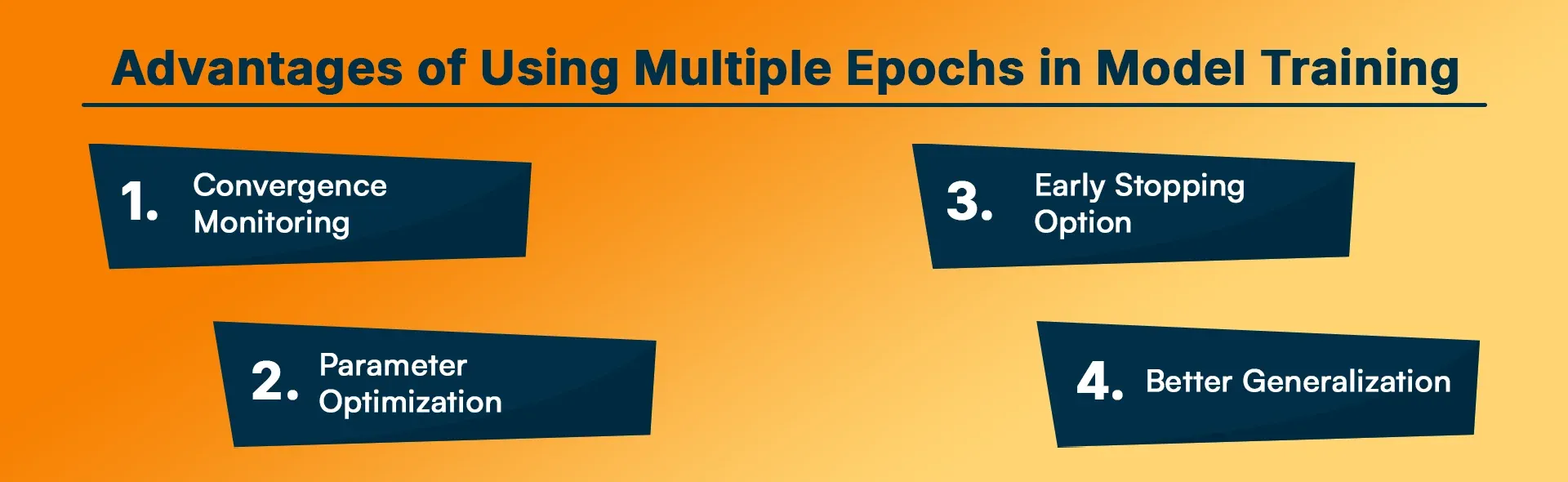

Using multiple epochs in training brings several benefits, such as:

1. Convergence Monitoring: You can track in detail how your model's loss and accuracy change over time.

2. Parameter Optimization: A model works accurately only when its parameters are properly optimized, and more epochs give more opportunities for weight updates.

3. Early Stopping Option: You can stop training at the sweet spot where validation accuracy peaks; if a partner company trains your model, make sure it provides an early stopping option.

4. Better Generalization: Multiple passes over the data improve the model’s ability to detect hidden patterns.

I have noticed in most projects that deep learning models typically require 50–200 epochs before stabilising.


Disadvantages of Overusing Epochs in Model Training

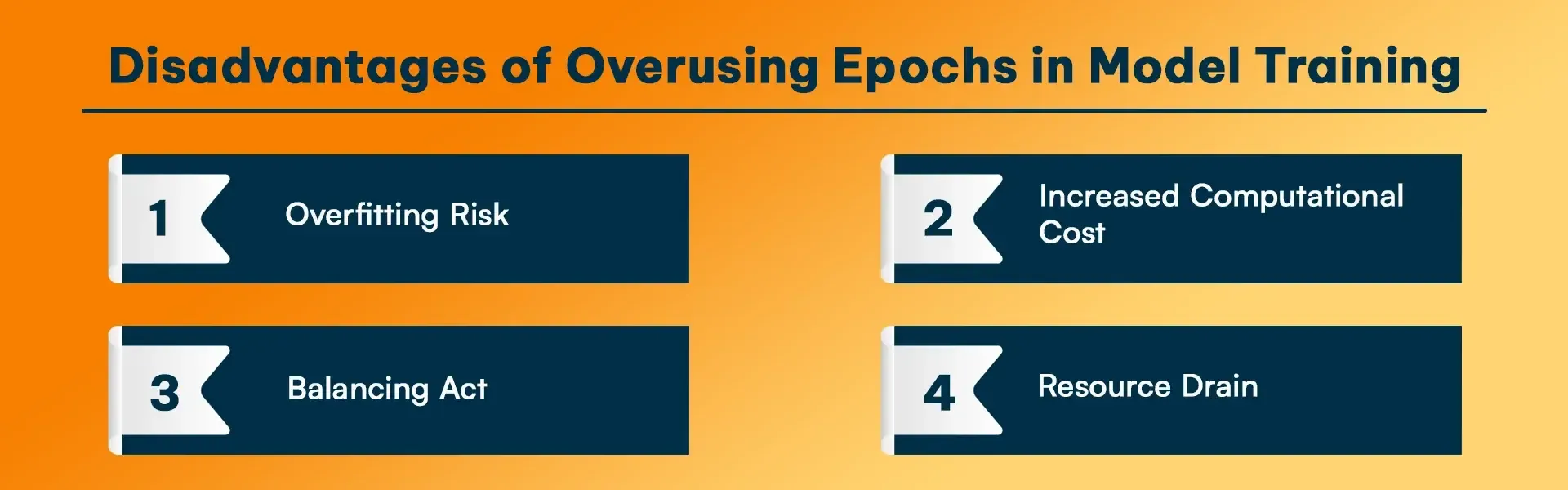

Epochs have disadvantages as well as advantages: training for too many epochs can cause several problems.

1. Overfitting Risk: The model may memorise the training data instead of learning patterns that generalize.

2. Increased Computational Cost: The more epochs you train for, the longer training takes.

3. Balancing Act: The epoch count has to be balanced, because too few epochs lead to underfitting and too many waste resources and effort.

4. Resource Drain: The GPUs and TPUs used for training are expensive, so running unnecessary epochs burns money and compute.

My Experience: I have noticed that these disadvantages mostly show up when training is run by an inexperienced engineer; with proper knowledge and careful monitoring, they can be largely avoided.

Role of Epochs in Deep Learning vs Traditional Machine Learning

While epochs are relevant across all machine learning models, their importance is especially pronounced in deep learning.

- In Traditional ML Models: Algorithms like decision trees, linear regression, or k-nearest neighbours don’t rely heavily on epochs because they don’t use iterative parameter updates in the same way neural networks do. They are often trained in a single pass or until convergence.

- In Deep Learning Models: Neural networks have millions (sometimes billions) of parameters. To optimize them effectively, the model requires multiple epochs to repeatedly adjust weights through backpropagation.

From my personal experience: when I trained a simple linear regression model, a single pass was often enough. But when I worked on a convolutional neural network for image recognition, I needed over 100 epochs before achieving reliable accuracy. This difference highlights why deep learning frameworks (TensorFlow, PyTorch, Keras) put so much emphasis on the epoch hyperparameter.
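The contrast can be seen in a toy example, assuming NumPy and synthetic data: ordinary least-squares linear regression can be solved directly in one step, while the same model trained by gradient descent needs many epochs to reach the same answer. The data, learning rate, and 300-epoch budget are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)
X = np.column_stack([rng.uniform(-1, 1, 500), np.ones(500)])  # one feature plus a bias column
true_params = np.array([1.5, -2.0])
y = X @ true_params + rng.normal(0, 0.1, 500)

# Traditional approach: solve least squares directly, no epochs involved
closed_form, *_ = np.linalg.lstsq(X, y, rcond=None)

# Iterative approach: full-batch gradient descent, one update per epoch
params = np.zeros(2)
lr = 0.1
for epoch in range(300):
    grad = 2 * X.T @ (X @ params - y) / len(y)   # gradient of the mean squared error
    params -= lr * grad

print("closed form:     ", np.round(closed_form, 3))
print("after 300 epochs:", np.round(params, 3))
```

Both approaches end up with essentially the same parameters; the iterative one simply needs many epochs to get there, and a neural network has no closed-form shortcut at all.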

Common Mistakes Beginners Make with Epochs

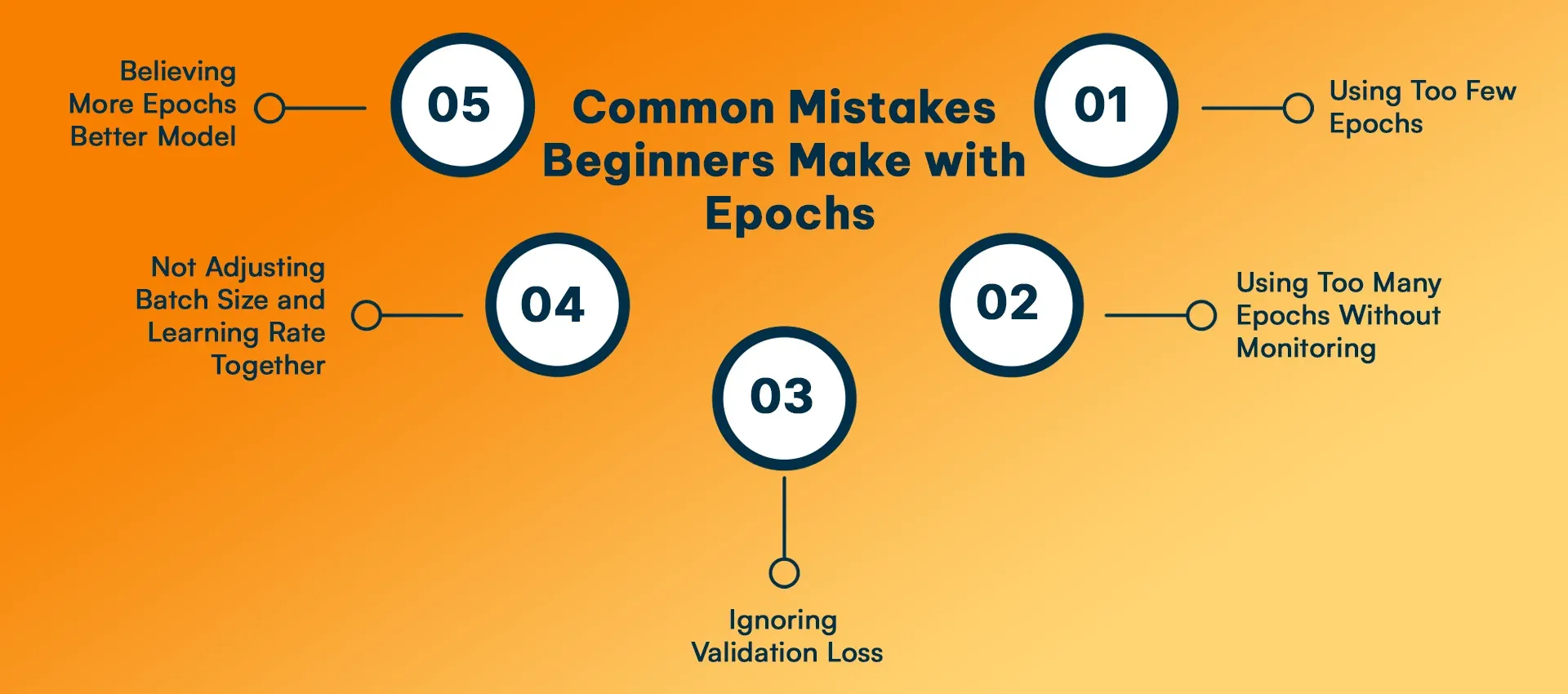

When I first started, I made plenty of mistakes with epochs, and I see beginners repeating the same ones.

- Using Too Few Epochs: Stopping too early results in underfitting. The model hasn’t seen the data enough to learn patterns.

- Using Too Many Epochs Without Monitoring: Training endlessly can cause overfitting and waste precious GPU hours.

- Ignoring Validation Loss: Beginners often watch only training accuracy, forgetting that validation metrics are the real indicator of generalization.

- Not Adjusting Batch Size and Learning Rate Together: Epochs don’t work in isolation. A poor combination of batch size, learning rate, and epoch count can ruin training efficiency.

- Believing More Epochs = Better Model: This is a myth. Beyond a certain point, extra epochs only degrade performance on unseen data.

Real-World Example: Training an Image Classification Model

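Let’s make this even more practical. Suppose you’re training a deep learning model on the CIFAR-10 dataset (60,000 images across 10 categories). For simplicity, this example treats all 60,000 images as training data, although the standard split reserves 10,000 of them for testing.

- Dataset Size: 60,000 images

- Batch Size: 128

- Epochs: 50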

Step 1: Iterations per Epoch

60,000 ÷ 128 = 468.75, which rounds up to 469 iterations per epoch (the final batch holds only the remaining 96 images).

Step 2: Total Iterations for 50 Epochs

469 × 50 = 23,450 iterations

During this process, here’s what you might typically observe:

- In the first 10 epochs, the model learns basic features like edges and colours.

- By epoch 30, it starts recognizing object shapes.

- By epoch 50, the model reaches strong accuracy but risks overfitting if pushed further.

This real-world flow shows why monitoring training curves and applying techniques like early stopping is essential.
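Monitoring the curves can be partly automated. The sketch below uses a hypothetical `find_overfitting_onset` function and made-up loss values; it flags the first epoch of a run in which validation loss keeps rising while training loss keeps falling, which is the classic overfitting signature.

```python
def find_overfitting_onset(train_losses, val_losses, window=3):
    """Return the 1-based epoch where validation loss started rising while training
    loss kept falling, if that pattern lasts `window` consecutive epochs; else None."""
    rising = 0
    for i in range(1, len(val_losses)):
        val_up = val_losses[i] > val_losses[i - 1]
        train_down = train_losses[i] < train_losses[i - 1]
        if val_up and train_down:
            rising += 1
            if rising == window:
                # The streak began `window - 1` steps before index i (0-based)
                return i - window + 2
        else:
            rising = 0
    return None

# Hypothetical per-epoch losses
train_losses = [1.00, 0.80, 0.60, 0.50, 0.40, 0.35, 0.30]
val_losses   = [1.10, 0.90, 0.80, 0.75, 0.78, 0.82, 0.90]
print(find_overfitting_onset(train_losses, val_losses))  # 5
```

Here validation loss bottoms out at epoch 4, and the function reports epoch 5 as the point where the two curves began to diverge.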

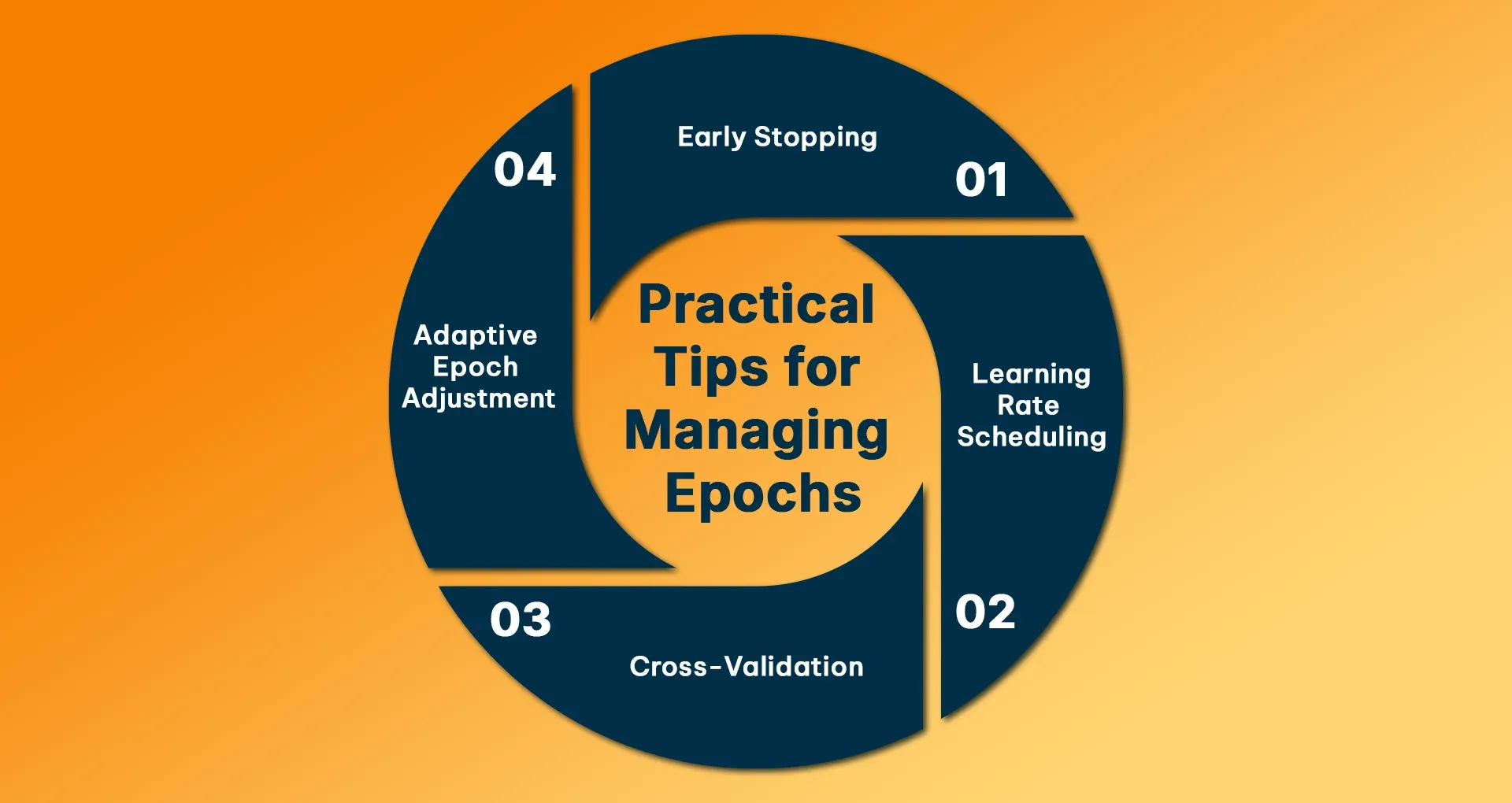

Practical Tips for Managing Epochs

Here are some practical tips that I personally use, and you can use them too.

1. Early Stopping: Monitor the validation loss during training and stop once it no longer improves.

2. Learning Rate Scheduling: Gradually reduce the learning rate as the epochs progress.

3. Cross-Validation: Cross-validation helps you estimate the right epoch count before full training.

4. Adaptive Epoch Adjustment: Start with a generous number of epochs, then prune the count based on the results.

These practical methods will not only save you time but also help your models strike the best balance between accuracy and efficiency.
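The learning rate scheduling tip can be illustrated with a step-decay schedule, one common option among many. This is a sketch with hypothetical defaults (halve the rate every 10 epochs); PyTorch provides the same idea as `torch.optim.lr_scheduler.StepLR`, configured with `step_size` and `gamma`.

```python
def step_decay(initial_lr, epoch, drop_factor=0.5, epochs_per_drop=10):
    """Learning rate for a 0-based epoch: multiply by drop_factor every epochs_per_drop epochs."""
    return initial_lr * (drop_factor ** (epoch // epochs_per_drop))

# Print the schedule at a few sample epochs
for epoch in [0, 9, 10, 25, 40]:
    print(epoch, step_decay(0.1, epoch))
```

The rate stays at 0.1 for epochs 0 to 9, halves to 0.05 at epoch 10, and keeps halving every 10 epochs, so later epochs make smaller, more careful adjustments.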

Conclusion

Epochs may look like a small term, but they play a very important role in machine learning, because how well a model trains depends heavily on how many epochs it runs. Choosing the right number of epochs is part of tuning the training process, and tracking progress epoch by epoch is how you measure that training. If you are a computer engineering student, I recommend understanding epochs, batches, and iterations in more detail, as this will show you how all three work together when a model learns.

Apart from this, if you are a start-up founder, I recommend that you never set the number of epochs arbitrarily; use validation loss, early stopping, and experimentation instead.

This will give your training a balance of efficiency and accuracy. And the best option would be to contact an AI or custom software solution firm like RejoiceHub about this, so that you can stay stress-free.

Frequently Asked Questions

1. What is an epoch in machine learning?

An epoch is one complete pass of the entire training dataset through the model.

2. How many epochs should I train for?

This largely depends on your dataset and model, so start with 50–100 epochs and use early stopping.

3. What’s the difference between epoch and iteration?

An epoch is a full pass over the dataset, while an iteration is a single batch update; both are key concepts in machine learning.

4. Why use batches instead of the whole dataset?

Batches make training more efficient, reduce memory usage, and can improve generalisation.

5. Can too many epochs hurt my model?

Yes. Too many epochs can cause overfitting, where the model memorises the training data.

6. Is batch size related to epochs?

Yes. Batch size controls how many samples are processed in each iteration, so it directly determines the number of iterations per epoch.

7. How do I monitor epoch performance?

To track epoch performance, monitor the training and validation loss and accuracy curves. If validation loss increases while training loss decreases, the model is overfitting.