If you have been following the evolution of artificial intelligence over the last few years, as I have, you have probably noticed something: AI models keep getting bigger, faster, and more powerful, yet they still make mistakes, much like humans do. Sometimes they give irrelevant answers, sometimes they forget what they said earlier, and sometimes they confidently present information that does not exist. The core reason for all these issues is a lack of proper context.

Traditional AI systems were best at pattern matching and statistical prediction, and even today’s models struggle to “understand” context. They can analyze massive datasets, but without context, such as your conversation history, real-world factors, or even basic situational awareness, their answers feel disconnected and shallow. This is why context engineering was introduced.

Context engineering is a big part of why the models behind ChatGPT Plus are good at recalling previous conversations and maintaining situational awareness. It is going to be the backbone of future technologies.

In today’s article, let’s look at context engineering in detail, covering its different aspects and uses.

Quick Summary

I have often felt that ChatGPT would be much better if it could remember my previous conversations, because then I would not have to share the same information again and again. Earlier models could not do this because they did not use context engineering. Since context engineering became common practice, AI models have become much more consistent and logical in managing conversations and responding.

Context engineering gives users better personalization, significantly improves accuracy, reduces the chances of false information, and leads to smarter AI agents.

Context engineering is now essential for AI agents: without it, their personalization is weak, they lack contextual awareness, and as a result the adaptive memory of AI chatbots is poor.

Our Focus in this article:

1. What is Context Engineering, and how does it work?

2. What is the working process of Context Engineering?

3. In which sectors is Context Engineering used?

4. What is the current status and future of Context Engineering?

What is Context in AI?

You have probably heard the word "context" before, but its meaning varies from field to field, and it has a specific meaning in AI. Let's break down "Context in AI" to understand it.

1. Understanding Context in Artificial Intelligence

When we talk about "context" in AI, we mean the information and memory an AI system draws on to interpret our input correctly, so that we get the right answer.

For example, when you tell a friend that it is very cold today, they understand that you are hinting that they should turn on the heater, not asking for a weather forecast. Responding and acting according to the situation like this is called contextual awareness.

In computational terms, context can include:

- Situational context: Generally, time, location, and environment are counted in situational context.

- Historical context: Conversational history, prior interactions, and stored memory from any chatbot are counted in the historical context.

- Environmental context: We count real-time sensor data, IoT feeds, or external APIs in the environmental context.

- Social context: User preferences, tone, relationships, or emotional state are counted in the social context.

Humans can naturally process all these contexts, but smart engineering is required to enable AI to understand the context and interpret it accordingly.

2. Context vs. Data: The Critical Difference

Many people think that context and data are the same thing, but they are quite different. Raw data is just information, such as "User clicked on this product", while context adds history, such as "User searched for this product earlier and has now clicked on it".

For example, an AI with poor context will give wrong answers or recommendations, because it cannot understand the context behind a query or human behavior and respond accordingly. That is why context engineering is becoming so important today.

3. The Role of Context in Machine Understanding

You can think of context as the backbone of deeper machine understanding, because the accuracy of an AI model's or agent's answers and actions depends on it.

- Enhances semantic understanding: Context-aware AI can interpret intent, not just words.

- Disambiguates inputs: “Apple” could mean the fruit or the company, and context decides which meaning applies in a given situation.

- Enables reasoning: With layered context, AI not only responds but also reasons about the query, adapts, and improves from its answers.
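The “Apple” example can be made concrete with a toy, Lesk-style approach: pick the sense whose signature words overlap most with the surrounding words. This is only a minimal sketch; the sense signatures below are hypothetical hand-written word lists, not a real lexicon, and modern systems use learned embeddings instead.

```python
import re

# Hypothetical hand-written "signatures" for each sense of "apple".
SENSES = {
    "fruit": {"eat", "juice", "tree", "sweet", "pie", "orchard", "fresh"},
    "company": {"iphone", "mac", "stock", "shares", "ceo", "launch", "software"},
}

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))

def disambiguate(sentence: str, senses: dict[str, set[str]] = SENSES) -> str:
    """Return the sense whose signature shares the most words with the sentence."""
    context = tokenize(sentence)
    # Score each sense by how many of its signature words appear in the context.
    scores = {sense: len(context & words) for sense, words in senses.items()}
    return max(scores, key=scores.get)

print(disambiguate("Apple shares rose after the iPhone launch"))         # company
print(disambiguate("I baked an apple pie with fruit from the orchard"))  # fruit
```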

I have used and tested almost all the major models, chatbots, and AI agents, and in my experience, talking to a chatbot without context feels like talking to a stranger, even if you have been using it for a year. A context-integrated system can track the previous conversation and respond with it in mind.

What is Context Engineering?

Let us now turn to the most important topic: “Context Engineering”. Starting with its definition, we will go through its different aspects so that all your doubts about it are cleared.

1. Formal Definition and Core Concepts

Context engineering is a systematic approach to designing, managing, and delivering the contextual information that AI models use to produce their output. Instead of relying only on static training data, the model is given relevant details such as user preferences, past interactions, or real-time data, so that it can answer in context wherever required.

As I understand it, the main goal of context engineering in AI systems is to collect data, structure and store it, and then retrieve the right information in context. This is quite similar to knowledge management; the only difference is that the knowledge is stored in and used by AI systems rather than people.

You can think of context engineering as the architecture behind intelligent behavior: it shapes how the AI reasons, how it reduces misunderstandings, and how it stays consistent across sessions.

2. Context Engineering vs. Traditional AI Approaches

Traditional AI models are trained once on massive datasets, so even today's powerful models often fail in new situations or when asked tricky or complex questions.

For example, if you train your business's chatbot only on FAQ data, it can never adapt dynamically and its answers will be limited.

Context engineering changes this by:

- Enabling dynamic adaptation: AI models can adapt dynamically using real-time data and updates.

- Supporting continuous memory: AI models can remember past conversations and answer you in the current chat accordingly.

- Allowing real-world integration: Contextual AI models can connect to external APIs, documents, and databases.

In short, contextual awareness is what makes an AI model genuinely smart.

3. The Context Engineering Lifecycle

Building a context-aware system follows a multi-stage lifecycle. Let's look at each stage.

1. Identification & Mapping: Working out what context a particular query needs, what the question may mean in the real world, and which past conversations are relevant to it.

2. Modeling & Representation: Deciding how that context will be structured and represented so the model can use it. Generative AI has improved models' understanding considerably, and context engineering builds on that by giving them well-structured context.

3. Integration & Deployment: Connecting the context sources to the AI model and deploying the system. Good contextual design makes this step much easier.

4. Maintenance & Evolution: Keeping the context up to date by storing only relevant, fresh information and removing what is stale.

NVIDIA uses context engineering in many of its operations and actively shares context engineering knowledge with its developer community.

4. Key Principles of Effective Context Engineering

A few basic principles make context engineering effective and scalable, and each of them is critical.

Relevance & Precision: Only the most important details go into the model's context window, not everything, so that accuracy stays high and data stays manageable.

Timeliness & Freshness: Outdated context can mislead AI models, so AI models try to keep their information and knowledge updated.

Scalability & Efficiency: It is very important for context systems to be efficient because they have to handle thousands of queries in real time.

Privacy & Security: Contextual user data is often confidential, so anonymization and access control are non-negotiable to make sure no user data is exposed.

This is why most AI chatbots warn you not to share sensitive data. In 2023, for example, Samsung restricted employee use of ChatGPT and similar tools after engineers pasted confidential source code into it.

From an engineering perspective, the real challenge is not giving AI "more data" but giving it the right data, at the right time, in the right format. That is what context engineering is about.

Why Context Engineering Matters in Modern AI

Without context engineering, chatbot conversations are weak and the answers often seem illogical, so let's look in detail at why context engineering has become so important.

1. The Context Crisis in Current AI Systems

Despite being trained on massive datasets, LLMs often fail to give contextual answers. If you have used AI agents, you have probably noticed that a chatbot sometimes forgets your previous or even current query and gives an answer that is out of context. This weakens both the chatbot's accuracy and users' trust in it.

The most common issues are:

- Hallucinations: Models get confused about basic facts, sometimes invent answers, and give responses that make no sense in real life.

- Ambiguity failures: Models often misinterpret words and phrases because the contextual background is unclear.

- Statelessness: Without context awareness, a chatbot treats every query as the start of a fresh conversation because it has no memory of past exchanges, so the conversation never feels personalized.
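To make statelessness concrete: a stateless chatbot sends only the latest message to the model, while a stateful one keeps a running history and sends it with every turn. The sketch below keeps a bounded buffer of recent turns; the `ConversationMemory` class and its `max_turns` limit are hypothetical illustrations, and the actual model call is left out.

```python
from collections import deque

class ConversationMemory:
    """Keeps the most recent conversation turns so each request carries history."""

    def __init__(self, max_turns: int = 10):
        # A deque with maxlen silently drops the oldest turn once it is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append({"role": role, "content": text})

    def build_messages(self, new_user_message: str) -> list[dict]:
        """Return prior turns plus the new message, ready to send to a chat model."""
        return list(self.turns) + [{"role": "user", "content": new_user_message}]

memory = ConversationMemory(max_turns=4)
memory.add("user", "My name is Priya and I ordered a blue lamp.")
memory.add("assistant", "Thanks, Priya. How can I help with your lamp?")
messages = memory.build_messages("Can I change its color?")
print(len(messages))  # 3: two remembered turns plus the new question
```

Bounding the buffer is a simple design choice that keeps requests inside the model's context window; real systems often summarize older turns instead of dropping them.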

Context engineering tackles these problems at the root by supplying the model with relevant, up-to-date information at the moment it answers, so its responses stay reliable as situations change.

2. Business Impact and Competitive Advantage

For businesses, context engineering is not just a technical upgrade but a competitive advantage. Companies adopt context-aware systems for a few basic reasons:

- Improved customer experience: Context-aware chatbots remember information from previous conversations and past complaints and use it in the current conversation, which makes interactions feel personalized and more human.

- Better decision-making: Context-driven analytics reduce errors and improve accuracy in financial forecasting, healthcare decisions, and logistics planning.

- Lower costs: Context reduces repetitive queries and automation failures, which significantly cuts operational expenses for organizations.

In my career, I have seen many businesses apply context engineering to their static FAQ-based chatbots and see quality, customer trust, and retention improve.

3. Technical Advantages

Context engineering also solves several engineering challenges and brings technical advantages of its own.

- Reduced complexity: Instead of training giant models to cover every domain up front, it is often better to give them smaller, relevant chunks of contextual data. The model sees less data, but that data is more relevant, so accuracy improves.

- Efficient computing: Supplying targeted context, rather than retraining or scaling up models, reduces compute costs and dependence on powerful hardware.

- Explainability: When AI draws on clearly identified contextual sources, its answers can be traced back to those sources, which makes its reasoning easier to explain.

This not only makes AI systems smarter but also more sustainable in the long term.

4. Industry Adoption Trends

In 2025, many businesses are implementing context-aware systems across their operations.

- Many enterprises are adopting contextual assistants for HR, finance, and customer service.

- The demand for context-aware tools is growing significantly in the e-commerce, healthcare, and education sectors.

- Many investors and companies are investing in contextual technologies like retrieval-augmented generation (RAG) and vector databases to make "context engineering" smarter.

Businesses that fail to add context to their automated operations risk losing their competitive edge.

How Context Engineering Works

Context engineering is powerful and helps a lot in operations, but it is also important to understand how it actually works.

1. Data Collection and Context Gathering

Multi-source Data Integration

Gathering data is the foundation of context engineering. Traditional AI depends only on its fixed training data, whereas context-aware systems gather information from multiple sources, often in real time.

- User interaction history: Contextual AI systems remember past queries, clicks, and chat logs.

- Behavioral patterns: AI systems learn user behavior patterns, which reveal customer preferences and purchase habits.

- Environmental sensors & IoT devices: Context-aware AI checks environmental data such as location, temperature, and device status, which gives models much richer contextual awareness.

- External APIs & real-time feeds: Many AI systems pull in data such as financial information, weather updates, and news streams, and external APIs are the main channel for this.

From my experience, I can say that contextual awareness for any model depends to a great extent on external sources and the quality of those sources.
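A simple way to picture multi-source integration is merging per-source records into one context object the model can read. In this sketch, the three sample dictionaries stand in for a chat-history store, a behavior log, and a weather API; all names and fields are hypothetical, and later sources override earlier ones when keys collide.

```python
from typing import Any

def merge_context(*sources: tuple[str, dict[str, Any]]) -> dict[str, Any]:
    """Merge (source_name, data) pairs into one context dict.

    Later sources win on key collisions; the origin of each value is recorded
    so downstream code can tell where a piece of context came from.
    """
    merged: dict[str, Any] = {}
    provenance: dict[str, str] = {}
    for name, data in sources:
        for key, value in data.items():
            merged[key] = value
            provenance[key] = name
    merged["_provenance"] = provenance
    return merged

# Hypothetical sample data standing in for real integrations.
history = ("chat_history", {"last_topic": "refund request", "user_id": "u42"})
behaviour = ("behaviour_log", {"favorite_category": "outdoor gear"})
weather = ("weather_api", {"city": "Pune", "temperature_c": 31})

context = merge_context(history, behaviour, weather)
print(context["last_topic"], context["_provenance"]["temperature_c"])
# refund request weather_api
```

Recording provenance is a deliberate choice here: it lets the system judge how much to trust each piece of context, which ties back to the point about source quality.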

Context Discovery Techniques

Each query needs different context, so these systems have to determine which context to use for which query.

- Many AI systems automatically mine context from unstructured text or logs.

- AI models also store user feedback on output quality (e.g., thumbs up/down, ratings).

- AI models use implicit signals, such as time spent in a chat or products added to a cart, to understand context.

- Many AI systems use third-party data providers to enrich their context and fill in missing details.

These layered collection methods enrich the context and make AI models more actionable.

2. Structuring and Encoding Context

Context Representation Models

Once the information is gathered, it is very important to structure the context so that machines can understand the user's query.

- Graph-based models: Represent relationships between different entities.

- Hierarchical taxonomies: Organize context into categories and subcategories.

- Temporal sequences: Capture events or outputs in the order they happen over time.

- Multi-dimensional vectors: Encode context in numerical form so the model can match it against whatever query comes in.
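The graph-based and temporal approaches can be illustrated with plain Python structures: subject-relation-object triples for relationships and a time-sorted list for events. This is a toy sketch with hypothetical data; real systems would typically use a graph database or knowledge-graph library.

```python
from datetime import datetime

# Graph-based context: (subject, relation, object) triples.
triples = [
    ("alice", "works_at", "Acme"),
    ("alice", "manages", "bob"),
    ("Acme", "located_in", "Berlin"),
]

def neighbors(entity: str) -> list[tuple[str, str]]:
    """Return (relation, object) pairs for every edge leaving `entity`."""
    return [(rel, obj) for subj, rel, obj in triples if subj == entity]

# Temporal context: events kept in time order so recency can be used.
events = [
    (datetime(2025, 3, 2, 9, 0), "opened support ticket"),
    (datetime(2025, 3, 1, 18, 30), "viewed pricing page"),
    (datetime(2025, 3, 2, 9, 5), "uploaded invoice"),
]
events.sort(key=lambda e: e[0])

print(neighbors("alice"))  # [('works_at', 'Acme'), ('manages', 'bob')]
print(events[-1][1])       # uploaded invoice (most recent event)
```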

Encoding Strategies

No matter how large the datasets AI models are trained on, raw data is useless unless it is properly encoded. Some common strategies are:

- Vector embeddings capture semantic meaning.

- Knowledge graphs represent facts as linked entities, so relationships can be followed across different contexts.

- Schemas give the data a consistent structure, so that everything is stored properly and the model can find what it needs to answer in context.

- Compression techniques fit the data within context window limits.

Together, these strategies make context both machine-readable and efficient, so the user and the machine share the same understanding of the situation.
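The context-window limit can be handled with a simple budgeting step: rank candidate snippets by relevance and keep the highest-ranked ones that fit a fixed budget. This sketch assumes relevance scores are already available and uses word count as a rough stand-in for tokens; a real system would count tokens with the model's own tokenizer.

```python
def fit_to_budget(snippets: list[tuple[float, str]], max_words: int) -> list[str]:
    """Greedily keep the most relevant snippets whose total word count fits the budget.

    `snippets` is a list of (relevance_score, text) pairs; word count is used
    as a rough proxy for tokens.
    """
    selected, used = [], 0
    # Consider the most relevant snippets first.
    for score, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        cost = len(text.split())
        if used + cost <= max_words:
            selected.append(text)
            used += cost
    return selected

candidates = [
    (0.92, "Order 1042 shipped on 3 March via express courier."),
    (0.40, "The store was founded in 2011 and sells home goods."),
    (0.75, "Customer prefers email updates over SMS."),
]
print(fit_to_budget(candidates, max_words=16))
# ['Order 1042 shipped on 3 March via express courier.', 'Customer prefers email updates over SMS.']
```

The low-relevance store history is dropped first, which is the "right data, not more data" idea in miniature.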

3. Feeding Context to AI Models

Integration Architectures

Context is injected into AI models in several ways, each aimed at keeping answers reliable and grounded in the right context.

- Pre-processing injection: Relevant context is added to the input before the model processes the query, so it is available before the question is answered.

- Runtime augmentation: Advanced systems fetch additional context dynamically while the query is being processed.

- Post-processing refinement: The model's output is checked against the available context so that answers can be refined and kept relevant.

- Hybrid approaches: Systems combine several of these methods for robustness and accuracy.
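Pre-processing injection is the easiest of these to show: context is placed into the prompt before the model ever sees the user's question. The template and the `build_prompt` helper below are hypothetical; the exact prompt format depends on the model and API you use.

```python
def build_prompt(question: str, context_items: list[str], user_profile: dict[str, str]) -> str:
    """Assemble a prompt that places context ahead of the user's question."""
    profile_lines = "\n".join(f"- {key}: {value}" for key, value in user_profile.items())
    context_lines = "\n".join(f"[{i}] {item}" for i, item in enumerate(context_items, start=1))
    return (
        "Use only the context below to answer. If it is not enough, say so.\n\n"
        f"User profile:\n{profile_lines}\n\n"
        f"Context:\n{context_lines}\n\n"
        f"Question: {question}"
    )

prompt = build_prompt(
    "Where is my order?",
    ["Order 1042 shipped on 3 March via express courier."],
    {"name": "Priya", "preferred_language": "English"},
)
print(prompt)
```

Numbering the context items also helps with post-processing refinement, since an answer can be checked against the specific items it cites.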

Context Delivery Mechanisms

To deliver context at scale, AI systems rely on several mechanisms.

- API-based context services: Context is retrieved on demand through APIs, which makes it easy to add custom services and integrate with other systems.

- Database-driven management: Persistent storage holds long-term context, such as user profiles and interaction history, which the system manages and queries as needed.

- In-memory caching: Caching active-session context in memory lets chatbots give fast, relevant responses.

- Distributed systems: Context is distributed across multiple agents, and contextual awareness is built into each agent’s core functionality.
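In-memory caching for session context can be as simple as a dictionary whose entries expire after a time-to-live (TTL). This is a single-process sketch with a hypothetical `SessionCache` class and an injectable clock so the expiry can be demonstrated; production systems usually rely on a dedicated cache such as Redis instead.

```python
import time
from typing import Any, Callable, Optional

class SessionCache:
    """A tiny in-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so examples and tests can control time
        self._store: dict[str, tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # evict stale context lazily on read
            return None
        return value

# Usage with a fake clock: the entry is served until its TTL runs out.
now = [0.0]
cache = SessionCache(ttl_seconds=300, clock=lambda: now[0])
cache.set("session:u42", {"last_intent": "track_order"})
print(cache.get("session:u42"))  # {'last_intent': 'track_order'}
now[0] = 301.0
print(cache.get("session:u42"))  # None
```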

In short, you can think of context engineering as the circulatory system of AI: it collects data, structures it, and delivers the right information at the right moment, so AI agents can make decisions with full awareness.

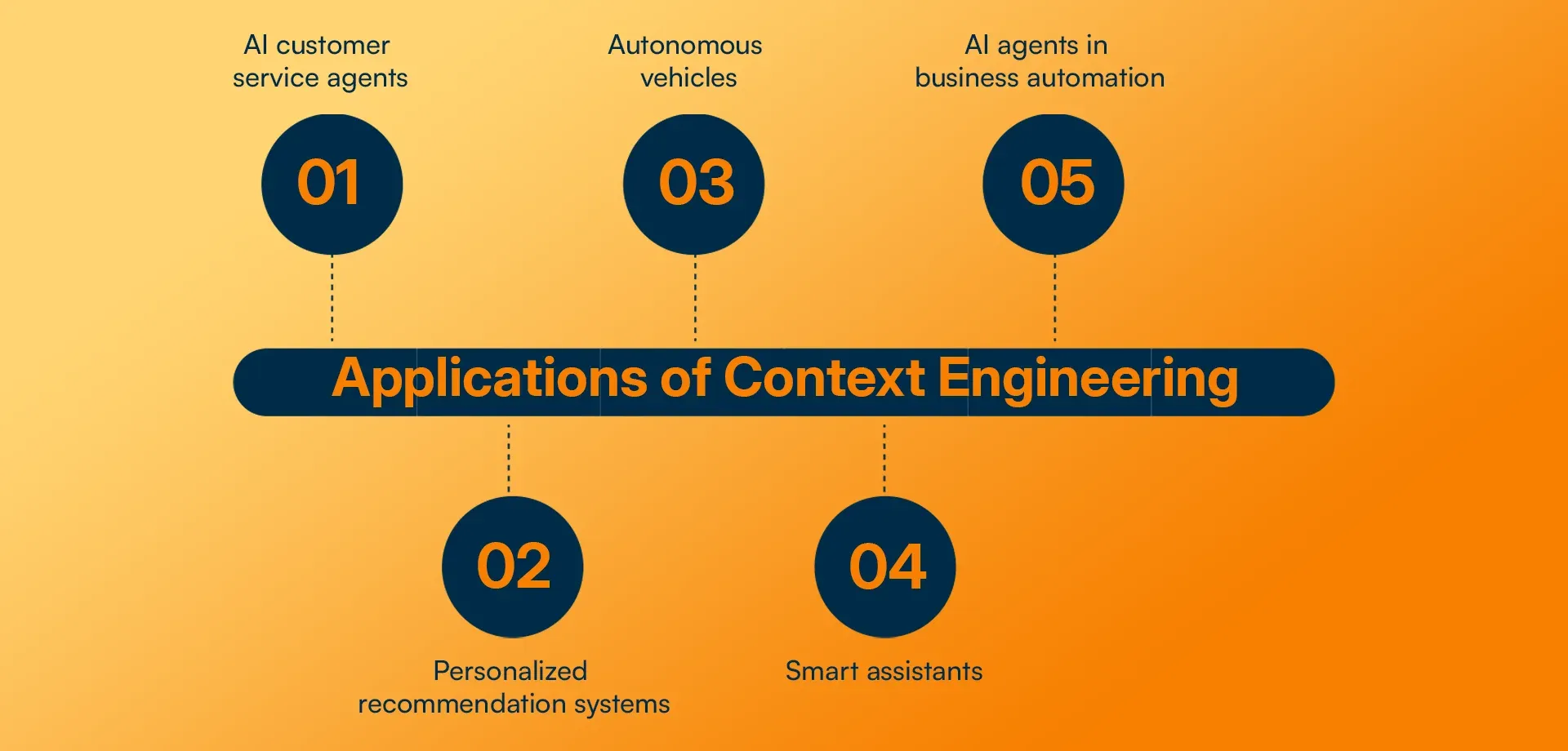

Applications of Context Engineering

Context engineering has many important applications across different sectors. Let's look at them in detail.

1. AI Customer Service Agents

According to some expert estimates, the customer support market could reach $800 billion by 2035, which shows the potential of customer service, one of the biggest beneficiaries of context engineering. Context-aware AI agents can remember purchase history, previous complaints, and even a customer's communication style, and tailor their responses accordingly.

- They retain previous interaction history, so if you asked about a refund last week, today’s bot won’t start from scratch.

- They integrate purchase preferences to suggest relevant solutions.

- They provide multi-channel continuity: your web chat and phone call both share the same context.

- They even recognize emotional tone to respond empathetically.

Real-world Implementation Examples

- Chatbots with memory: Platforms like Intercom and Zendesk are rolling out AI agents that remember users across sessions.

- Escalation prevention: Context-aware bots solve more queries on first contact, reducing human handovers.

- Personalized recommendations: Instead of generic replies, bots tailor answers to individual cases.

2. Personalized Recommendation Systems

Contextual systems have the capabilities to provide personalized recommendations, thus improving the user experience.

Advanced Personalization Techniques

Recommendation systems built on contextual models have much better context awareness and take many factors into account:

- Temporal context: It gives suggestions based on the exact time of the day or week.

- Location-based context: Food delivery apps like DoorDash and Uber Eats recommend options based on the location of your office or home.

- Social context: The system analyzes your friends' activities and peer reviews, which gives the model awareness of social context.

- Emotional/mood context: The system suggests music or content based on your mood and current state, as you will also see on YouTube and Netflix.
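Temporal context can be added to a recommender as a simple re-ranking step: boost items tagged for the current time of day. The items, tags, time slots, and boost value below are hypothetical; real systems learn such weights from data rather than hard-coding them.

```python
from datetime import datetime

def time_slot(moment: datetime) -> str:
    """Map a timestamp to a coarse time-of-day slot."""
    hour = moment.hour
    if 5 <= hour < 11:
        return "morning"
    if 11 <= hour < 16:
        return "afternoon"
    if 16 <= hour < 22:
        return "evening"
    return "night"

def rerank(items: list[dict], moment: datetime, boost: float = 0.5) -> list[str]:
    """Sort items by base score, adding `boost` if tagged for the current slot."""
    slot = time_slot(moment)

    def score(item: dict) -> float:
        return item["score"] + (boost if slot in item["slots"] else 0.0)

    return [item["name"] for item in sorted(items, key=score, reverse=True)]

menu = [
    {"name": "pizza", "score": 0.8, "slots": {"evening", "night"}},
    {"name": "coffee", "score": 0.6, "slots": {"morning"}},
    {"name": "salad", "score": 0.7, "slots": {"afternoon"}},
]
print(rerank(menu, datetime(2025, 5, 1, 8, 30)))  # ['coffee', 'pizza', 'salad']
print(rerank(menu, datetime(2025, 5, 1, 20, 0)))  # ['pizza', 'salad', 'coffee']
```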

Industry Applications

- E-commerce: Context-rich AI recommends seasonal bundles or products, which is very beneficial for businesses.

- Streaming platforms: Platforms like Netflix or YouTube recommend content based on watch time, interactions, and the user's mood at that time of day.

- Social media feeds: Platforms like Instagram or Facebook suggest new posts based on past interaction history.

- Finance: Contextual AI gives financial strategies or predictions based on market trends and past history, which helps businesses make smart and quick decisions.

3. Autonomous Vehicles

Tesla's Model 3 and Model Y offer advanced driver-assistance features (Autopilot and Full Self-Driving, which still require an attentive driver), made possible to a large extent by contextual models. Let's see how contextual AI is transforming autonomous vehicles.

Environmental Context Processing

Autonomous vehicles rely on environmental context processing, and context engineering makes it more advanced. This processing covers several areas:

- Real-time traffic data: These vehicles track roadblocks, congestion, and reroutes using onboard sensors and models.

- Weather impact: Autonomous vehicles analyze external conditions such as rain, fog, and snow.

- Pedestrian & vehicle prediction: Self-driving cars anticipate the behavior of pedestrians and other drivers, a critical safety mechanism.

- Smart city data: Autonomous vehicles can integrate with road sensors and traffic systems, which helps them make accurate decisions.

Safety and Decision-Making

Context awareness ensures that vehicles can make the right call at the right time to maintain safety.

- Emergency response: It prioritizes emergency vehicles and clears routes for them.

- Adaptive driving styles: Self-driving cars adjust to their passengers; for example, with children on board they drive more smoothly and slowly, and they can choose energy-efficient routes.

- Integration with infrastructure: Autonomous vehicles use V2X (vehicle-to-everything) communication, in which the vehicle connects wirelessly with road infrastructure and other entities that can affect driving.

4. Smart Assistants and Voice AI

Conversational Context Management

Ever been annoyed when Alexa or Google Assistant forgets your previous command? Context engineering fixes this by:

- Understanding multi-turn dialogues (“Book a flight”, “Make it evening”, “Add extra baggage”).

- Using intent disambiguation (recognizing “Apple” as fruit vs. company).

- Offering proactive help (reminding you of tasks based on past patterns).

- Syncing cross-device context so your request continues seamlessly from phone to smart speaker.
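The flight example is essentially slot filling: each turn updates a shared dialogue state instead of being interpreted on its own. The keyword rules and slot names in this sketch are hypothetical stand-ins for a real intent and entity recognition model.

```python
def update_state(state: dict, utterance: str) -> dict:
    """Return a new dialogue state updated with whatever this turn mentions."""
    text = utterance.lower()
    new_state = dict(state)  # keep earlier slots; only change what this turn adds
    if "book a flight" in text:
        new_state["intent"] = "book_flight"
    for period in ("morning", "afternoon", "evening"):
        if period in text:
            new_state["departure_time"] = period
    if "baggage" in text:
        new_state["extra_baggage"] = True
    return new_state

state: dict = {}
for turn in ["Book a flight", "Make it evening", "Add extra baggage"]:
    state = update_state(state, turn)
print(state)
# {'intent': 'book_flight', 'departure_time': 'evening', 'extra_baggage': True}
```

“Make it evening” only makes sense because the earlier intent is still in the state, which is exactly what a stateless assistant loses.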

5. AI Agents in Business Automation

Process Context Awareness

Business automation tools powered by context can handle far more complex workflows than traditional rule-based bots. They use context like:

- Workflow state: AI agents know where a process is standing right now.

- Document and transaction history: AI business automation can automatically recognize relevant records.

- Organizational hierarchy: AI agents know who is authorized to approve which requests, so they can route or hold requests accordingly.

- Compliance context: Contextual AI models ensure that safety and security regulations are being met.
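Workflow state and organizational hierarchy can be modeled together as a small state machine whose transitions check the actor's role. The states, roles, and rules below are hypothetical examples for an expense-approval flow.

```python
# Allowed transitions: (current_state, action) -> (next_state, roles allowed to act).
TRANSITIONS = {
    ("submitted", "review"): ("under_review", {"manager", "finance"}),
    ("under_review", "approve"): ("approved", {"finance"}),
    ("under_review", "reject"): ("rejected", {"manager", "finance"}),
}

def advance(state: str, action: str, role: str) -> str:
    """Move the workflow forward if the action is valid for this state and role."""
    rule = TRANSITIONS.get((state, action))
    if rule is None:
        raise ValueError(f"'{action}' is not allowed from state '{state}'")
    next_state, allowed_roles = rule
    if role not in allowed_roles:
        raise PermissionError(f"role '{role}' cannot '{action}' in state '{state}'")
    return next_state

state = advance("submitted", "review", role="manager")  # under_review
state = advance(state, "approve", role="finance")        # approved
print(state)
```

Keeping the rules in a table rather than scattered `if` statements makes the workflow context easy to inspect and audit, which also helps with compliance.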

Examples and Use Cases

- Intelligent document processing: Advanced AI agents can extract context from contracts.

- Customer onboarding: Many businesses offer automated KYC checks that rely on dynamic context validation; Google AdSense, for example, uses a PIN-based address verification process.

- Security monitoring: AI models have the capability to detect anomalies using context-driven data.

- Supply chain optimization: Logistics AI agents manage the supply chain according to real-time demand, which reduces product waste and makes it easier to optimize resources.

In short, you will find context engineering applied everywhere, from everyday apps to critical systems that support our cities and businesses. Its ability to move technology from reactive to proactive is what makes it so powerful, and it makes technology more of a partner than a tool.

Context Engineering vs Prompt Engineering

We have covered a lot about context engineering, but you have probably also heard of prompt engineering. Many people think the two are almost the same, but they are not.

1. Key Differences and Relationships

- Prompt engineering means refining the inputs (prompts) so that the model's answer matches what the user actually wants.

- Context engineering ensures that the AI has the right background knowledge and memory before processing the input.

Think of it this way: prompt engineering is like asking a precise question, while context engineering is like making sure the AI has already read the relevant chapters of the book before you ask. Both approaches complement each other.

2. Technical Distinctions

- Prompt engineering is usually lightweight and query-focused, like tweaking search terms for better results.

- Context engineering is heavier and infrastructure-driven; it involves building pipelines, databases, and APIs that feed relevant information into the model.

- In terms of scalability, prompt engineering works for quick tasks, but context engineering is essential for long-term, complex systems.

From my experience testing both, prompt engineering works well for one-off tasks, but when you need continuous, adaptive intelligence (like an AI agent that manages business workflows), context engineering is non-negotiable.

3. When to Use Each Approach

- Simple tasks: Prompt engineering alone is sufficient. For example, generating a blog outline.

- Complex, multi-step processes: Context engineering is essential. For example, customer onboarding that requires documents, compliance checks, and memory of prior interactions.

- Hybrid approaches: The best systems use both: prompts shaped by context.

4. Integration Strategies

In practice, companies often use context to inform prompts and prompts to refine context. For example, a retrieval-augmented generation (RAG) system first retrieves the right documents (context engineering) and then uses carefully designed prompts to frame the model’s response (prompt engineering).

This synergy ensures that AI doesn't just answer correctly but also answers in a way that's relevant, consistent, and personalized.
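The retrieve-then-prompt pattern described above can be sketched end to end with a naive keyword-overlap retriever. Everything here is a hypothetical toy: real RAG systems usually retrieve with embeddings from a vector store and send the final prompt to an LLM, which this sketch leaves out.

```python
import re

DOCUMENTS = [
    "Refunds are issued within 5 business days after the returned item arrives.",
    "Express shipping takes 1 to 2 days within the country.",
    "Gift cards cannot be exchanged for cash.",
]

def words(text: str) -> set[str]:
    """Lowercased word set used for overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Context engineering step: pick the k documents sharing the most words with the query."""
    q = words(query)
    return sorted(docs, key=lambda d: len(q & words(d)), reverse=True)[:k]

def make_prompt(query: str, passages: list[str]) -> str:
    """Prompt engineering step: frame the model's task around the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only these facts:\n{context}\n\nQuestion: {query}\nAnswer briefly."

query = "How many days until my refund arrives?"
print(make_prompt(query, retrieve(query, DOCUMENTS)))
```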

Benefits of Context Engineering

Context engineering is a forward-looking technology that will keep evolving, which is why businesses are already implementing it, and we can see many long-term benefits.

1. Improved Model Performance

The biggest advantage of context engineering is that it makes AI models more accurate and reliable. Instead of relying only on static training, these models adapt dynamically, so they handle complex decisions and tricky situations that confuse ordinary AI.

2. More Accurate and Relevant Responses

I have often felt that AI responses without context are quite generic. With context engineering, answers feel tailored to each situation.

For example, if someone on an e-commerce platform searches for “my last order,” the AI system will not respond with the general shipping policy; it will check the details of that user's last order and give a personalized answer. This shift greatly improves semantic understanding and cuts down on irrelevant outputs.

3. Personalized User Experiences

In today's AI-driven world, users expect personalized results, such as an assistant that can adjust to their tone, recall their preferences, and anticipate their needs.

Over time, these systems adapt much as a friend would and respond to the situational context, leading to higher user satisfaction and engagement.

4. Reduced Error Rate in AI Outputs

Context engineering acts as a built-in quality check by validating answers against stored context. This helps catch contradictions, hallucinations, and other errors before they reach the end user.

This helps make AI interactions more trustworthy, especially in critical domains like healthcare, finance, or customer service.

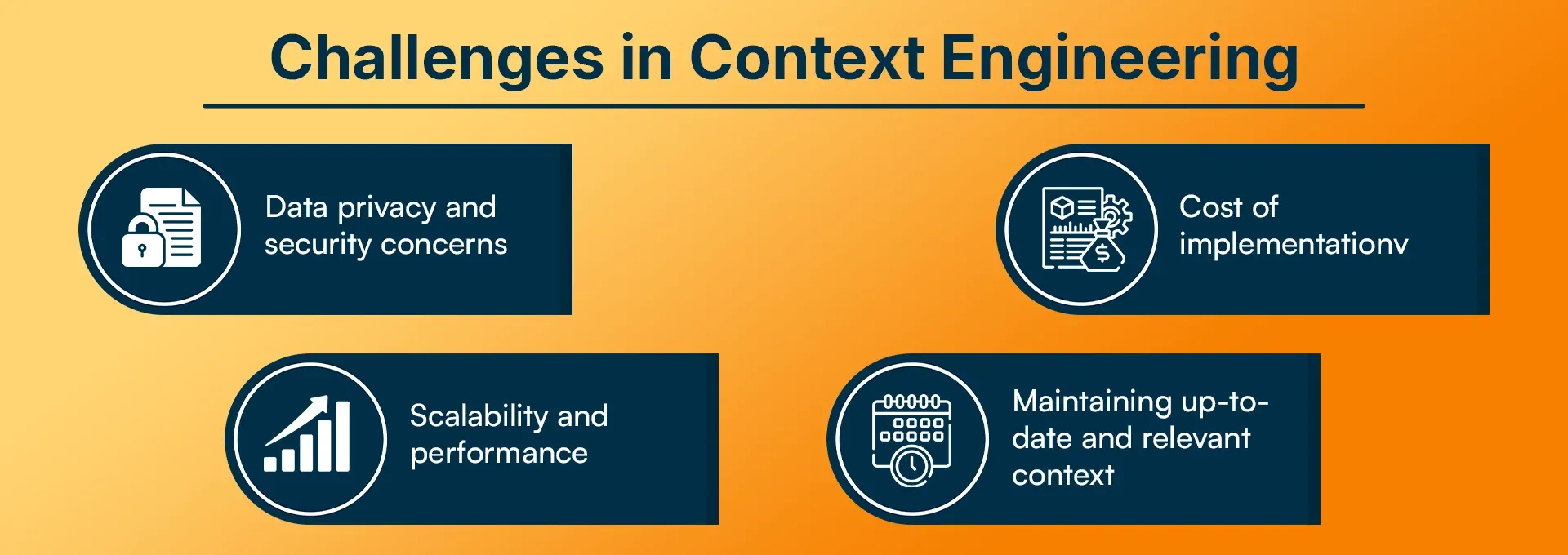

Challenges in Context Engineering

Context engineering is a powerful technology, but because it is still evolving, it comes with several challenges.

1. Data Privacy and Security Concerns

The first major challenge is handling sensitive contextual data. Since context often includes personal histories, preferences, or even health information, ensuring privacy is critical. Engineers must implement strategies like:

- Anonymization and pseudonymization to strip personally identifiable information.

- Federated learning so models learn without exposing raw data.

- Differential privacy techniques to protect aggregated context.

- User consent mechanisms, giving people control over what context is stored and used.

Without strong safeguards, context engineering could quickly turn into a privacy nightmare.
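Pseudonymization can be sketched with a keyed hash: identifiers are replaced by stable tokens, so records for the same user can still be linked without storing the raw value. This minimal sketch uses Python's standard `hmac` and `hashlib` modules; the hard-coded key and the simple email regex are assumptions for illustration, and keyed hashing alone is not full anonymization.

```python
import hashlib
import hmac
import re

# Assumption: in a real system this key comes from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a stable, keyed token (same input -> same token)."""
    digest = hmac.new(key, value.lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"

def scrub_emails(text: str) -> str:
    """Swap every email address in free text for its pseudonym before storing it as context."""
    return EMAIL_RE.sub(lambda m: pseudonymize(m.group(0)), text)

note = "Customer priya@example.com asked about order 1042."
print(scrub_emails(note))
```

Using a keyed HMAC rather than a plain hash means someone without the key cannot simply hash a list of known emails to reverse the tokens.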

2. Scalability and Performance Issues

Context processing is computationally expensive. Imagine retrieving relevant context for millions of queries per second; it requires massive optimization. It is also costly for businesses to deploy, which will be especially challenging for small businesses in South America and Southeast Asia. The main issues include:

- Real-time retrieval of context at scale.

- Storage optimization to handle both structured and unstructured context.

- Network latency in distributed systems.

- Load balancing to ensure performance during high demand.

The trade-off between depth of context and system speed is a constant engineering puzzle.

3. Maintaining Up-to-Date and Relevant Context

As we know, context is dynamic; what was true yesterday may not be valid today. AI systems risk making mistakes if they rely on outdated information. To avoid this, engineers need:

- Expiration policies to automatically remove old context.

- Change detection mechanisms to update context in real time.

- Conflict resolution strategies when multiple contexts overlap.

- Version control systems to track how context evolves.

Keeping context fresh is as important as collecting it.
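Expiration policies and version tracking can be combined in one small store: every update to a context key is appended with a timestamp, and reads ignore versions older than a maximum age. The `VersionedContext` class and its `max_age` parameter are hypothetical, and timestamps are passed in explicitly to keep the example deterministic.

```python
from datetime import datetime, timedelta
from typing import Any, Optional

class VersionedContext:
    """Stores every version of each context key and ignores versions older than `max_age`."""

    def __init__(self, max_age: timedelta):
        self.max_age = max_age
        self.history: dict[str, list[tuple[datetime, Any]]] = {}

    def update(self, key: str, value: Any, at: datetime) -> None:
        # Append rather than overwrite, so the full change history is kept.
        self.history.setdefault(key, []).append((at, value))

    def current(self, key: str, now: datetime) -> Optional[Any]:
        """Return the newest version that has not expired, or None."""
        for at, value in reversed(self.history.get(key, [])):
            if now - at <= self.max_age:
                return value
        return None

ctx = VersionedContext(max_age=timedelta(days=30))
ctx.update("shipping_address", "12 Old Road", at=datetime(2025, 1, 5))
ctx.update("shipping_address", "7 New Street", at=datetime(2025, 3, 1))
print(ctx.current("shipping_address", now=datetime(2025, 3, 10)))  # 7 New Street
print(ctx.current("shipping_address", now=datetime(2025, 5, 1)))   # None (too old)
print(len(ctx.history["shipping_address"]))                        # 2 versions kept
```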

4. Cost of Implementation

Building and maintaining context pipelines isn’t cheap. It requires investment in:

- Infrastructure for databases, APIs, and vector search engines.

- Skilled teams who understand both AI and knowledge management.

- Ongoing operational costs for updates and monitoring.

Organizations must carefully calculate ROI to ensure the benefits outweigh the costs.

5. Technical Complexity

Lastly, context engineering demands expertise in integration and maintenance. Teams need to:

- Align new context systems with legacy infrastructure.

- Train staff on specialized tools.

- Handle ongoing troubleshooting.

In my experience, this complexity is one reason many companies hesitate to adopt context engineering, yet those who invest early often gain the biggest long-term advantages.

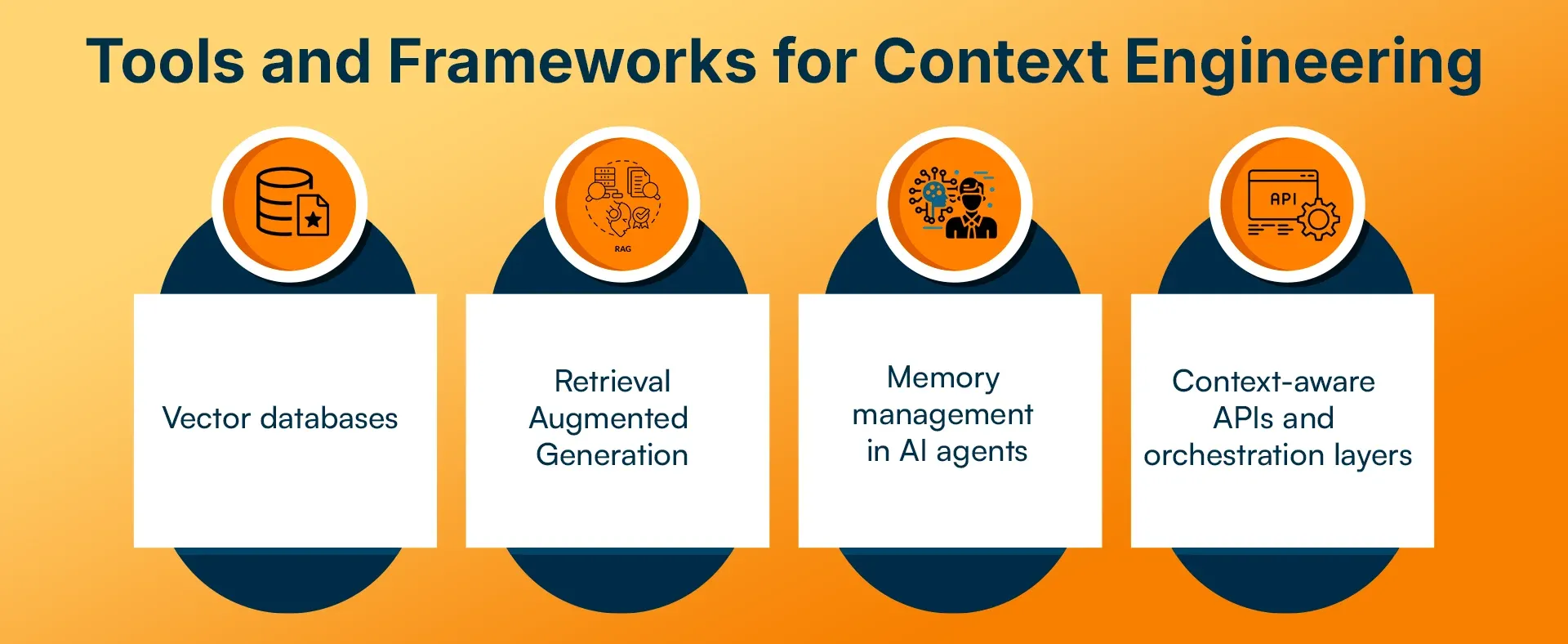

Tools and Frameworks for Context Engineering

Context engineering is not as easy as it seems; in practice, it is nearly impossible to implement without a set of complex tools and frameworks. Here are the main categories.

1. Vector Databases

Vector databases store data as numerical vectors (embeddings), which makes searches and contextual understanding in AI applications more efficient.

Leading Platforms

Vector databases are the backbone of modern context engineering because they enable semantic search, finding meaning, not just keywords. Some leading platforms include:

- Pinecone: Cloud-native, fully managed, excellent for production workloads.

- Weaviate: Open-source, extensible, and integrates smoothly with ML pipelines.

- Chroma: Lightweight and developer-friendly, designed for embeddings.

- Qdrant: High-performance, scalable, with advanced filtering capabilities.

Key Features and Capabilities

- Semantic similarity search for matching queries with the most relevant documents.

- Real-time operations so context updates instantly.

- High scalability to support enterprise-level workloads.

- Integration with ML frameworks like LangChain, Hugging Face, and LlamaIndex.

I have used vector databases in many of my projects, and they have proved very useful: they act as the system's "recall", letting it retrieve the most relevant information without bloating the model size.
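At its core, "semantic similarity search" means ranking documents by cosine similarity to a query. Here is a toy in-memory index that does exactly that. It is an assumption-laden sketch, not how Pinecone, Weaviate, Chroma, or Qdrant work internally. It uses word-count vectors in place of learned embeddings, so it only matches shared words rather than meaning. It also scores every document by brute force instead of using an approximate nearest-neighbor index.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorIndex:
    def __init__(self) -> None:
        self._vectors: Dict[str, Counter] = {}  # doc_id -> vector

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert or replace a document's vector."""
        self._vectors[doc_id] = embed(text)

    def query(self, text: str, top_k: int = 2) -> List[Tuple[str, float]]:
        """Brute force: score every stored vector and return the top_k matches."""
        q = embed(text)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


if __name__ == "__main__":
    index = InMemoryVectorIndex()
    index.upsert("d1", "Our refund policy allows returns within 30 days")
    index.upsert("d2", "Shipping usually takes 3 to 5 business days")
    index.upsert("d3", "Contact support to request a refund")
    for doc_id, score in index.query("How do I get a refund?"):
        print(doc_id, round(score, 3))  # d3 0.333, then d1 0.144
```

Swapping `embed` for a real embedding model is what turns this word-overlap search into a genuinely semantic one. The indexing and ranking logic stays the same.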

2. Retrieval-Augmented Generation (RAG)

RAG is a framework that improves a model’s answers by grounding them in external knowledge.

RAG Architecture Components

RAG combines retrieval systems with generation models, ensuring answers are both accurate and context-grounded. Generally, it includes:

- Knowledge base construction (indexing documents, embeddings).

- Query understanding (embedding the user’s request).

- Context retrieval (ranking and selecting the best matches).

- Generation (feeding retrieved context into the LLM).
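The four RAG components can be sketched end to end in a few functions. This is a simplified illustration with several assumptions. Word-overlap (Jaccard) scores stand in for embedding similarity, and the function names are hypothetical. The final LLM call is left out, so the code stops at the augmented prompt that would be sent to the model.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))


# 1. Knowledge base construction: index each document (word sets stand in for embeddings).
def build_index(docs: Dict[str, str]) -> Dict[str, Set[str]]:
    return {doc_id: tokenize(text) for doc_id, text in docs.items()}


# 2 + 3. Query understanding and context retrieval: represent the query the same way,
# score every document by word overlap (Jaccard), and keep the best non-zero matches.
def retrieve(query: str, index: Dict[str, Set[str]], top_k: int = 2) -> List[str]:
    q = tokenize(query)
    scores = {d: len(q & words) / max(len(q | words), 1) for d, words in index.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [d for d in ranked[:top_k] if scores[d] > 0]


# 4. Generation: put the retrieved context in front of the question. The LLM call
# itself is out of scope here; this returns the prompt that would be sent to it.
def build_prompt(query: str, docs: Dict[str, str], doc_ids: List[str]) -> str:
    context = "\n".join(f"[{d}] {docs[d]}" for d in doc_ids)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = {
        "policy": "Refunds are accepted within 30 days of purchase.",
        "shipping": "Orders ship within 2 business days.",
        "hours": "Support is available Monday to Friday.",
    }
    question = "Are refunds accepted after 30 days?"
    print(build_prompt(question, docs, retrieve(question, build_index(docs))))
```

The instruction to answer only from the supplied context is what ties the generated answer back to retrieved sources. That grounding is the main reason RAG reduces made-up answers.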

Implementation Frameworks

- LangChain for chaining retrieval and LLM calls.

- Haystack for document search and QA pipelines.

- LlamaIndex (formerly GPT Index) for managing structured and unstructured contexts.

- Custom RAG architectures tailored to specific industries.

3. Memory Management in AI Agents

Every AI system needs storage and memory management to operate, and context engineering raises those demands considerably.

Memory Systems

For conversational AI, memory is context. Different types include:

- Short-term memory: active session details.

- Long-term memory: stored knowledge across interactions.

- Episodic memory: remembering entire conversations.

- Semantic memory: storing facts and concepts.
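Here is a rough sketch of how these memory types might map onto simple data structures. Short-term memory is a fixed-size window of recent turns. Episodic memory is a list of complete past conversations. Semantic memory is a key-value store of facts. Together, the episodic and semantic stores act as long-term memory. `AgentMemory` is a hypothetical class, not the API of MemGPT, AutoGen, or CrewAI.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

Turn = Tuple[str, str]  # (role, text)


class AgentMemory:
    """Hypothetical agent memory: a short-term window plus episodic and semantic stores."""

    def __init__(self, window: int = 4) -> None:
        self.short_term: Deque[Turn] = deque(maxlen=window)  # recent turns; oldest fall off
        self.episodes: List[List[Turn]] = []  # episodic: full past conversations
        self.facts: Dict[str, str] = {}  # semantic: durable facts about the user/world
        self._transcript: List[Turn] = []  # full current session, saved as an episode

    def add_turn(self, role: str, text: str) -> None:
        self.short_term.append((role, text))
        self._transcript.append((role, text))

    def remember_fact(self, key: str, value: str) -> None:
        self.facts[key] = value

    def end_session(self) -> None:
        """Archive the whole conversation as an episode and reset short-term memory."""
        self.episodes.append(self._transcript)
        self._transcript = []
        self.short_term.clear()

    def build_context(self) -> str:
        """Text that would be prepended to the model's next prompt."""
        facts = "; ".join(f"{k}={v}" for k, v in sorted(self.facts.items()))
        turns = "\n".join(f"{role}: {text}" for role, text in self.short_term)
        return f"Known facts: {facts}\nRecent turns:\n{turns}"
```

The window keeps prompts small, while facts survive across sessions. This is why a user doesn't have to repeat the same information in every new conversation.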

Tools and Libraries

- MemGPT: persistent memory for LLMs.

- AutoGen: enables multi-agent systems with shared memory.

- CrewAI: collaborative AI agents exchanging context seamlessly.

4. Context-Aware APIs and Orchestration Layers

API Management Platforms

Modern systems use APIs to deliver and update context in real-time. Advanced features include:

- Context-aware routing to direct queries based on user history.

- API versioning that respects context differences.

- Rate limiting based on the complexity of context queries.

- Monitoring & analytics for usage insights.
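Rate limiting based on query complexity can be sketched as a token bucket in which each request spends tokens in proportion to how much context it pulls in. The cost model in `query_cost` is a made-up assumption for illustration; real platforms define their own cost units. The injectable `clock` exists only so the limiter can be tested without waiting.

```python
import time
from typing import Callable


class ComplexityRateLimiter:
    """Token bucket: each request spends tokens proportional to its estimated cost."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now

    def allow(self, cost: float) -> bool:
        """Spend `cost` tokens if available; otherwise reject without spending."""
        self._refill()
        if cost <= self.tokens:
            self.tokens -= cost
            return True
        return False


def query_cost(top_k: int, uses_history: bool) -> float:
    """Hypothetical cost model: base cost, plus per retrieved document, doubled with history."""
    cost = 1 + 0.5 * top_k
    return cost * 2 if uses_history else cost
```

With this cost model, a light lookup (`query_cost(2, False)` is 2.0) spends far less than a history-heavy one (`query_cost(10, True)` is 12.0). Clients sending expensive context queries therefore hit the limit sooner.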

Orchestration Solutions

To keep everything running smoothly:

- Kubernetes manages containerized context services.

- Apache Airflow schedules context pipelines.

- Temporal ensures reliable, fault-tolerant context operations.

- Event-driven architectures trigger updates instantly when context changes.
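The event-driven idea can be illustrated with a minimal in-process publish/subscribe bus. When a profile changes, subscribers invalidate the cached context and queue the user for re-indexing. All names here are hypothetical. A production system would use a message broker or one of the orchestrators listed above rather than in-memory dictionaries.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[dict], None]


class ContextEventBus:
    """Minimal in-process publish/subscribe bus for context-change events."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Every handler runs immediately, so context updates as soon as the event fires.
        for handler in self._subscribers[event_type]:
            handler(payload)


# Hypothetical downstream consumers of a "profile.updated" event.
context_cache: Dict[str, str] = {"user-1": "plan=free"}
reindex_queue: List[str] = []


def invalidate_cache(event: dict) -> None:
    context_cache.pop(event["user_id"], None)  # force a fresh lookup next time


def schedule_reindex(event: dict) -> None:
    reindex_queue.append(event["user_id"])  # re-embed the user's updated profile


if __name__ == "__main__":
    bus = ContextEventBus()
    bus.subscribe("profile.updated", invalidate_cache)
    bus.subscribe("profile.updated", schedule_reindex)
    bus.publish("profile.updated", {"user_id": "user-1", "plan": "pro"})
    print(context_cache, reindex_queue)  # {} ['user-1']
```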

In short, these tools form the tech stack of context engineering, from storing and retrieving context, to managing memory, to orchestrating large-scale workflows. Without them, context-aware AI would remain theory instead of practical reality.

Future of Context Engineering in AI

Context engineering has evolved considerably over the last decade, and many experts expect that by 2035 a wide range of new models and algorithms will have been built in this field.

1. Adaptive Context Management

The next phase of context engineering is about making context systems self-learning. Instead of engineers constantly fine-tuning data pipelines, future systems will:

- Recognize new context patterns automatically.

- Evolve context models dynamically as environments change.

- Predictively preload context, anticipating user needs.

- Self-assess quality, discarding weak or irrelevant context on their own.

This will make AI far more autonomous and reduce the human effort needed to maintain contextual accuracy.

2. Agentive AI and Contextual Autonomy

We’re moving into the era of agentive AI: autonomous agents that act on our behalf. These agents won’t just use context; they’ll gather it themselves.

- Autonomous context acquisition: AI agents independently fetch data from sensors, APIs, or interactions.

- Context negotiation: Multiple agents will share and negotiate context to collaborate effectively.

- Distributed intelligence: Teams of AI systems exchanging context to solve problems collectively.

- Decision autonomy: Agents making reliable decisions without human prompts, because context guides them.

This is where context engineering truly unlocks the “AI teammate” vision.

3. Integration with Human-in-the-Loop Systems

Even as AI grows more autonomous, humans will remain vital in context validation. The future will emphasize:

- Crowdsourced context validation (users correcting AI memory).

- Expert knowledge incorporation into domain-specific contexts.

- Hybrid human-AI workflows, where humans provide critical judgment and AI handles the heavy lifting.

This balance ensures context remains accurate, ethical, and aligned with human values.

4. Predictions and Trends for 2025–2030

Looking ahead, several trends will shape context engineering:

- Quantum computing to supercharge context processing.

- Brain-computer interfaces (BCI) feeding direct human context into machines.

- IoT expansion, creating vast streams of contextual data.

- 5G/6G networks enabling real-time context streaming with minimal latency.

- Standardization & regulation, with formal context protocols and compliance rules.

- Context-as-a-Service (CEaaS) platforms emerging as a new industry.


Conclusion

Context engineering is not just a technical add-on; it is the foundation of truly intelligent AI systems. Traditional AI models lack personalization and struggle with tricky questions, and context engineering has largely solved this problem. Contextual systems increase relevance, accuracy, and trustworthiness in every interaction.

The benefits for businesses are clear: context engineering greatly enhances the customer experience, reduces costs, improves decision-making, and gives companies a competitive edge.

However, deploying context engineering in your business is a challenging task, so it is best to work with a reputable firm like RejoiceHub to avoid privacy and scalability issues in the future and keep your operations running smoothly.

Frequently Asked Questions

Q1. What’s the difference between context engineering and prompt engineering?

Prompt engineering focuses on optimizing input queries to get the best possible response from an AI model. It’s about phrasing questions and instructions carefully. Context engineering, on the other hand, is about building the environment and memory around the model so it always has the right background knowledge. While prompt engineering is like asking the right question, context engineering is like giving the model the right book to read beforehand.

Q2. How do you implement context engineering in existing AI systems?

Implementation usually follows these steps:

1. Identify context needs: Define what kind of data is relevant (customer history, workflow status, external APIs).

2. Collect and store context: Use vector databases, knowledge graphs, or metadata schemas.

3. Integrate with AI models: Through pre-processing, runtime augmentation, or hybrid approaches.

4. Deploy and maintain: Ensure context freshness, update mechanisms, and monitoring tools.

5. Refine continuously: Gather user feedback, improve pipelines, and enhance accuracy.

Q3. What are the main challenges in context engineering?

The biggest hurdles include:

- Data privacy and compliance (e.g., handling personal data responsibly).

- Scalability (retrieving context in real time at enterprise scale).

- Cost of infrastructure (databases, APIs, cloud services).

- Complexity of integration with legacy systems.

Mitigation strategies include federated learning, context expiration policies, cloud-native infrastructure, and phased rollouts to control costs.

Q4. Which tools should I use for context engineering?

The right tool depends on the use case:

- Vector databases (Pinecone, Weaviate, Qdrant) for semantic context retrieval.

- RAG frameworks (LangChain, Haystack, LlamaIndex) for combining retrieval with generation.

- Memory tools (MemGPT, AutoGen, CrewAI) for persistent and collaborative context.

- Orchestration solutions (Kubernetes, Airflow, Temporal) for managing pipelines at scale.

Evaluate based on scalability, ease of integration, and ROI.

Q5. How do you measure the success of context engineering implementations?

Key metrics include:

- Accuracy & relevance of AI responses.

- User satisfaction scores (CSAT, NPS).

- Operational efficiency (fewer escalations, reduced manual intervention).

- Cost savings from improved automation.

- ROI calculated from increased revenue or reduced errors.

Some companies also track hallucination rate reductions as a measure of success.
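Two of these metrics are easy to compute once you have a labeled evaluation set. Retrieval hit rate at k asks whether the right document showed up in the top k results. Hallucination rate measures the share of answers that were not supported by the retrieved context. The sketch assumes a hypothetical `EvalRecord` format filled in by human reviewers. Running it before and after adding context is one way to track hallucination-rate reductions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EvalRecord:
    relevant_doc: str       # document a reviewer marked as the correct source
    retrieved: List[str]    # document IDs the system retrieved, best first
    answer_supported: bool  # reviewer judged the answer grounded in the retrieved context


def hit_rate_at_k(records: List[EvalRecord], k: int) -> float:
    """Share of queries whose relevant document appears in the top k results."""
    return sum(r.relevant_doc in r.retrieved[:k] for r in records) / len(records)


def hallucination_rate(records: List[EvalRecord]) -> float:
    """Share of answers that reviewers found unsupported by the context."""
    return sum(not r.answer_supported for r in records) / len(records)


if __name__ == "__main__":
    # Made-up evaluation set for illustration.
    records = [
        EvalRecord("d1", ["d1", "d2"], True),
        EvalRecord("d3", ["d2", "d3"], True),
        EvalRecord("d4", ["d1", "d2"], False),
        EvalRecord("d2", ["d2"], True),
    ]
    print(hit_rate_at_k(records, 1), hit_rate_at_k(records, 2), hallucination_rate(records))
    # 0.5 0.75 0.25
```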

Q6. What skills do teams need for context engineering?

Teams need a mix of:

- AI/ML expertise (model integration, embeddings).

- Data engineering (pipelines, APIs, databases).

- Knowledge management (taxonomy, ontology, semantic search).

- Security & compliance (privacy-first context handling).

Upskilling staff or hiring specialists in retrieval systems and knowledge graphs is often necessary.

Q7. How does context engineering handle data privacy?

Through:

- Anonymization of sensitive data.

- User consent frameworks for transparency.

- Differential privacy & federated learning to limit raw data sharing.

- Compliance alignment with GDPR, HIPAA, and local regulations.

This ensures contextual systems are ethical and trustworthy.

Q8. What’s the future of context engineering?

The next 5–10 years will see:

- Context-as-a-Service (CEaaS) platforms.

- Quantum- and BCI-powered context pipelines.

- Standardized context protocols across industries.

- Human-AI collaboration models for validating context.